“Business is not just doing deals; business is having great products, doing great engineering, and providing tremendous service to customers. Finally, business is a cobweb of human relationships.”

H. Ross Perot

Abstract

In the past decade, the focus of information technology (IT) development has been on service-oriented architecture, especially the new service delivery model, Software-as-a-Service (SaaS). Accordingly, interest in quality management in the planning and operation of SaaS systems has increased tremendously. In practice, it is necessary to take into greater account the nature of service quality shared by both service provider and customer in the SaaS delivery.

This paper introduces a theory that integrates service quality and value co-creation (co-value) in the SaaS business relationships between service provider and customer. The theory is based, in part, on the results of a survey of Chief Information Officers (CIOs), which shows a strong correspondence between the service quality required or desired by a client and the business relationship needed between SaaS clients and providers. We have used the theory as the foundation for an approach and tool for evaluating SaaS applications.

Introduction

This article examines the effect of service quality on business relationships between clients and SaaS service providers. To date, most of the focus from both business and research perspectives has concentrated on how the provider of SaaS services can deliver services that meet their advertised service objectives and that are predominantly performance-based. Most SaaS customers are relegated to a “take it or leave it” situation with respect to many important service quality factors such as usability, sustainability, or adaptability of a service offering. To be competitive in the future, SaaS vendors will need to be more flexible with clients’ service quality needs and seek out co-value approaches in their business relationships with clients. This work recognizes this direction by developing a theory that integrates service quality and value co-creation (co-value) in the SaaS business relationships between service provider and customer.

Quality Management in SaaS Business Relationships

The notion of service quality considered in our research is derived from the following three perspectives:

- Conformance Quality: conformance to specifications
- Gap Quality: whether customer expectations are met or exceeded
- Value Quality: the direct benefits (value) to the customer

From the view of service providers, both Conformance Quality and Gap Quality measures are managed as part of their business relationship with customers. The Conformance Quality aspects, typically expressed in service level agreements (SLAs), are initially determined within the provider organization, often involving marketing, sales, and production units. The Gap Quality concerns, commonly assessed by the provider through customer surveys, help determine the gap between what customers expect from a service and what the provider is delivering.

From the view of service customers, Functional Needs and Value Quality are managed as part of their business relationship with providers. The Functional Needs express the user requirements for supporting workplace activities in the customer organization. The Value Quality measures, such as ROI and risk analysis, capture the value the customer organization places on deploying a service through SaaS.

Ideally, both the SaaS provider and customer continue to seek ways of maintaining a “win-win” business relationship where new or added co-value is continually being created for a service offering. Therefore, a major factor affecting the SaaS business relationship is a clear understanding of the co-value present in the service offerings.

Specification of Quality-Based SaaS Business Relationships

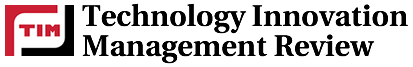

By integrating a quality management approach with co-value in SaaS business relationships, we can produce a specification of SaaS business relationships and illustrate its features using existing SaaS applications. The specification prescribes four service types based on the maturity levels of the business relationship between the service provider and customer: Ad-hoc, Defined, Managed, and Strategic. These service types are summarized in Table 1.

Table 1. Four Service Types in SaaS Business Relationships

We believe that quality measures play an increasing role in SaaS business relationships. Based on this belief, and as a step towards a theory of SaaS business relationships, we establish the following conjecture:

- The primary service attribute of interest in an Ad-hoc Service is functionality.
- The primary service attributes of interest in a Defined Service are those measured by Conformance Quality approaches.
- The primary service attributes of interest in a Managed Service are those measured by Gap Quality approaches.
- The primary service attributes of interest in a Strategic Service are those measured by Value Quality approaches.

Survey Approach: Validating the Theory on Service Attributes

To assist in validating the conjecture of our theory, we conducted a web-based online survey involving primarily CIOs from twenty commercial, governmental, and academic organizations in the local area. This survey was intended to capture the service customer's general view on the twelve typical service attributes in the selection and monitoring of SaaS services. The survey results were analyzed and used to confirm or refute our conjecture relating to SaaS business relationships.

In July 2009, we sent an invitation letter by email to the CIOs of 70 commercial, governmental, and academic organizations in the Edmonton and Calgary areas to ask for participation in the survey, and we initially received 30 positive responses. We then sent a second invitation letter to these 30 CIOs and directed them to a web-based online survey. By the end of August 2009, we had received answers from 20 CIOs, 10 of whom were willing to participate in a follow-up study should we wish to conduct one. To explore some aspects of SaaS in greater detail, we conducted a brief follow-up questionnaire study in September 2009 with these 10 CIOs. Seven of the 10 CIOs responded, and the results of this follow-up study are described later in this section.

In the Generic Survey, 19 questions were asked in the following six sections:

- Background information: questions about the background of the customer organization, such as size and nature of market focus, and respondent’s role in the organization.
- Use of external IT services/SaaS services: questions about the use of external IT services in the customer organization.
- Service attributes: questions about the priority of certain service attributes considered by the customer decision-maker (typically CIOs) when planning the use of IT services/SaaS services in four service types.
- IT service governance: questions addressing the issues of IT governance strategy used in the customer organization and how a SaaS evaluation model might support the organization’s IT governance approach.
- Strategic planning of IT: questions about how the customer takes the external IT services and SaaS services into account in strategic planning.
- Use of personal web-based services: questions about the impact of personal web-based services, such as eBay, Wikipedia, Google Maps, Facebook and YouTube, on IT-services planning in the customer organization.

In this article, we focus only on the first three sections of the survey, which relate to our analysis of service attributes with respect to the four service types, and especially on section 3 (service attributes). We asked respondents to select the best estimate of the priority of eight typical service attributes for each of the four service types defined earlier. We used a 5-point scale for the priority, where 5 stands for “high”, 3 stands for “medium”, and 1 stands for “low”. A priority of 5 therefore indicates that the respondent would rate this service attribute as high when making a decision about selecting a SaaS system. To extend our study to five other service attributes related to business planning, such as ROI and risk, we asked the participants of the follow-up study to select a priority for these five additional attributes using the same scale.

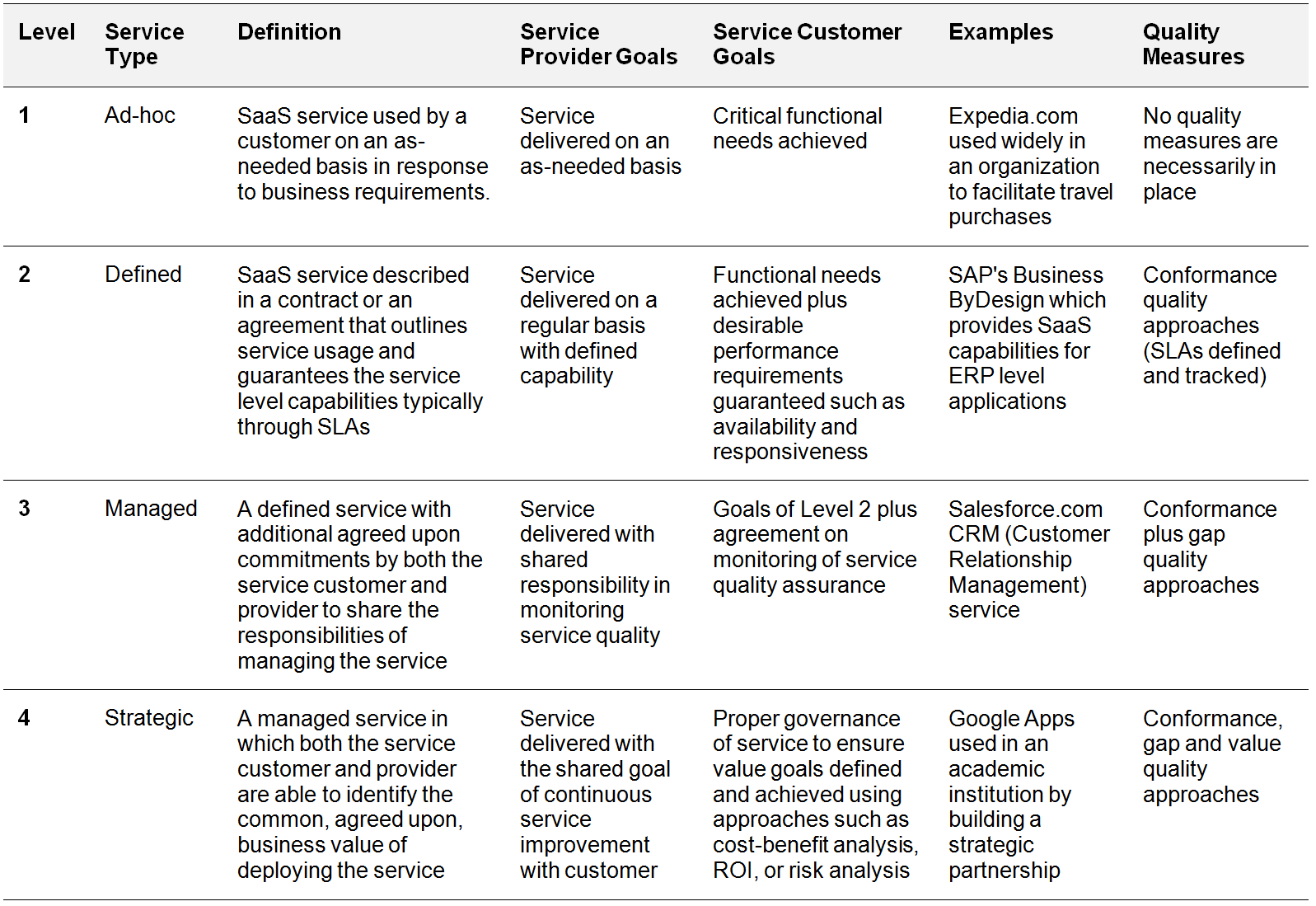

To categorize the service attributes, the most intuitive way is to calculate and compare the mean values of the priority. However, an analysis of mean values may not reflect the relative priorities of service attributes. Instead of using the mean value, we calculate the relative importance of each service attribute in the four service types. The relative importance of a service attribute in a service type is defined as the percentage of respondents who rate the priority of that service attribute in that service type higher than or equal to its priority in each of the other three service types. For example, if 18 out of 20 respondents rate the priority of Security in the Defined Service at least as high as in the other three service types, the relative importance of Security in the Defined Service is equal to 90%. Because each respondent's ratings are compared only with that same respondent's other ratings, relative importance is not affected by differences in rating standards between individual participants. The relative importance values calculated from the survey results are shown in Table 2.
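The relative importance calculation can be sketched in a few lines of Python. This is a minimal illustration of the definition given above, not the analysis code used in the study; the function name `relative_importance`, the input layout (one dictionary per respondent), and the sample ratings are assumptions made for the example.

```python
from typing import Dict, List

SERVICE_TYPES = ["Ad-hoc", "Defined", "Managed", "Strategic"]


def relative_importance(ratings: List[Dict[str, int]]) -> Dict[str, float]:
    """Relative importance of ONE service attribute in each service type.

    `ratings` holds one dict per respondent, mapping each service type to the
    priority (1 to 5) that respondent gave the attribute in that service type.
    The result is the percentage of respondents whose rating in a service type
    is higher than or equal to their rating in every other service type.
    """
    if not ratings:
        raise ValueError("at least one respondent is required")
    result = {}
    for service_type in SERVICE_TYPES:
        count = sum(
            1
            for r in ratings
            if all(r[service_type] >= r[other] for other in SERVICE_TYPES)
        )
        result[service_type] = 100.0 * count / len(ratings)
    return result


# Hypothetical ratings for Security from four respondents (not survey data).
security_ratings = [
    {"Ad-hoc": 2, "Defined": 5, "Managed": 5, "Strategic": 4},
    {"Ad-hoc": 3, "Defined": 4, "Managed": 3, "Strategic": 3},
    {"Ad-hoc": 1, "Defined": 3, "Managed": 4, "Strategic": 5},
    {"Ad-hoc": 5, "Defined": 5, "Managed": 5, "Strategic": 5},
]
print(relative_importance(security_ratings))
```

Because ties count towards every service type that shares the top rating, the percentages for one attribute can add up to more than 100%. A respondent who rates everything 5 and a respondent who rates everything 3 contribute identically, which is how the measure sidesteps differences in individual rating standards.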

Table 2. Summary of Relative Importance in the Four Service Types

The stair-like shaded areas in Table 2 strongly support our conjecture. As the business relationship intensifies from Ad-hoc to Strategic, the service customer should use progressively more types of quality approaches to manage service quality. The only two outliers in the grouping results are usability, which is typically measured by a gap quality approach (surveys of the customer experience), and cost, which is directly measured by a value quality approach (monetary value). Based on comments from the survey respondents, we conjecture that usability was misplaced because respondents confused it with the user capability of a system, which our definition treats as part of functionality. Both outliers need to be investigated further in more extensive and more intensive future studies.

With the two outliers adjusted, the service attribute groups are consistent with the types of quality measures:

- Functionality is the basic operational attribute required whenever a service is delivered successfully (i.e. Ad-hoc, Defined, Managed, and Strategic Service).
- Conformance quality attributes (Security, Availability, and Reliability) are measured by conformance quality approaches and typically are required when a service is delivered as a Defined, Managed, and Strategic Service.
- Gap quality attributes (Usability, Efficiency, Sustainability, and Adaptability) are measured by gap quality approaches and are typically required when a service is delivered as a Managed and Strategic Service. Gap quality attributes take into account more perspective from service customers.
- Value quality attributes (Cost, ROI, Risk, Continuity, and CSI) are measured by value quality approaches and are typically required when a service is delivered as a Strategic Service. In this sense, the value quality attributes are the most closely aligned with the business strategic objectives of both the service customer and provider.
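The cumulative relationship between attribute groups and service types described in the list above can be expressed as a small lookup structure. The following Python sketch is illustrative only: the names `ATTRIBUTE_GROUPS`, `GROUP_ORDER`, and `required_attributes` are assumptions introduced here and are not part of the evaluation tool.

```python
# Attribute groups as listed in the text (with the two outliers adjusted).
ATTRIBUTE_GROUPS = {
    "Functionality": ["Functionality"],
    "Conformance": ["Security", "Availability", "Reliability"],
    "Gap": ["Usability", "Efficiency", "Sustainability", "Adaptability"],
    "Value": ["Cost", "ROI", "Risk", "Continuity", "CSI"],
}

# Both lists are ordered by increasing maturity of the business relationship;
# the i-th service type adds the i-th attribute group to all earlier ones.
GROUP_ORDER = ["Functionality", "Conformance", "Gap", "Value"]
SERVICE_TYPES = ["Ad-hoc", "Defined", "Managed", "Strategic"]


def required_attributes(service_type: str) -> list:
    """Return the attributes typically required for the given service type."""
    level = SERVICE_TYPES.index(service_type)  # raises ValueError if unknown
    attributes = []
    for group in GROUP_ORDER[: level + 1]:
        attributes.extend(ATTRIBUTE_GROUPS[group])
    return attributes


for service_type in SERVICE_TYPES:
    print(service_type, required_attributes(service_type))
```

Keeping the groups and the service types in two parallel ordered lists makes the "stair-like" pattern explicit: moving one step up in service type never removes an attribute, it only adds the next group.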

Defining and Using the SaaS Evaluation Model

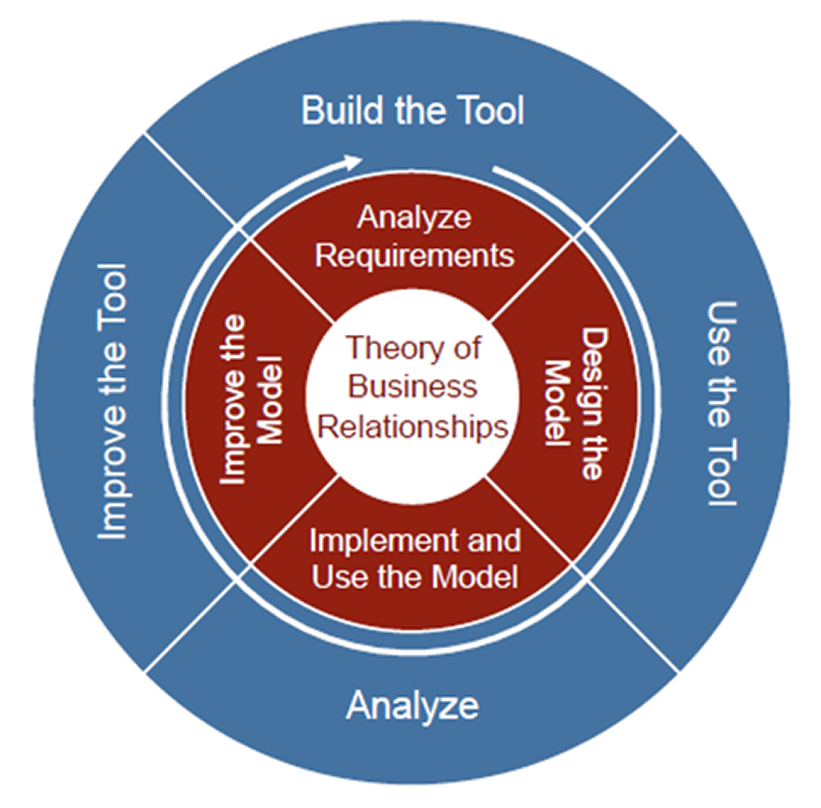

Based on our initial theory for integrating service quality and value co-creation (co-value) in the SaaS business relationships, we have developed a SaaS evaluation model. The model is intended to assist the service customer in selecting an appropriate SaaS system and provides the service provider and customer with a guide to monitor the service operation. The decisions related to both service selection and monitoring should be driven by the perceived co-value of the service provider and customer in establishing their business relationship. A two-cycle evolutionary approach is adopted in building our model (see Figure 1).

Figure 1: Evolutionary Cycles for the SaaS Evaluation Model

The inner cycle around the core theory lists the steps in defining and refining the SaaS evaluation model. We first analyze the requirements that the model should meet from the perspectives of both the service customer and provider. We then design the model using the Unified Modeling Language (UML). The model is then implemented as an evaluation tool, whose use starts the evolution of the outer cycle.

The outer cycle focuses on the evolution of the evaluation tool, which can be used in various SaaS service areas. Based on the evaluation model, the tool is built and used in a particular service area.

As an example, we used a prototype of the tool to simulate how it can assist in selecting a SaaS email service in the service-planning phase. Three steps are followed in the service selection procedure:

1. Build the experiential data. The experiential data are typically retrieved in service selection and updated in service monitoring. However, when the tool is first used, no real experiential data are available. To assist in building the experiential data for the evaluation tool, an online survey was conducted to collect experiential data in the particular SaaS service area - a SaaS solution for email systems. This email survey focused on the adoption of a specific SaaS email service, such as those provided by Google Mail and Microsoft Hotmail.

We undertook the email survey from June to July in 2009. The survey targeted academic institutions all over the world that were recognized as successful adopters by Google and Microsoft. The invitation procedure was similar to that of the generic survey. The key questions in the email survey asked about the priority of service attributes considered in service selection and the monitoring frequency in service operation. The results are used to build the experiential data for producing the selection and monitoring report.

2. Take inputs for service selection in email services. The service selection procedure takes inputs from both the service customer, on the functional and non-functional requirements, and the service provider, by capturing the service offering description and/or SLA templates from the World Wide Web.

From the service customer’s perspective, the evaluation tool collects the customer's requirements. In general, the following information is taken as the input from the service customer:

- general business motivation and business objectives for the adoption of an email service as provided by the customer organization
- specific objectives to be achieved by adopting an email service system (ranking of these specific objectives, if applicable)
- the service type (Ad-hoc, Defined, Managed, or Strategic) the customer believes is most appropriate for the service
- estimate of the priority of the service attributes used in making the decision to adopt a SaaS system
- estimate of the monitoring frequency of service attributes when using the SaaS system
- IT governance frameworks or strategies used when selecting and monitoring the SaaS system

To assist the decision maker in determining the requirements on service quality, we chose the following twelve service attributes for decision making in service selection and in the monitoring of service operation: Functionality, Security, Availability, Reliability, Usability, Efficiency, Sustainability, Adaptability, Cost, ROI (Return on Investment), Risk, and Continuity.

From the service provider’s perspective, the evaluation tool needs to determine if the service offerings are consistent with the service customer’s requirements collected in the previous step. In the email service area example, Google Apps for Education and Microsoft Live@edu are selected as the candidate service providers for the email system. Both applications provide email services for educational institutions. The input from the service provider includes the service terms and the initial version of SLAs, which can be captured from the provider’s websites.

3. Produce the service selection report. The selection report summarizes the information from both the service customer and provider, identifies potential problems such as incompleteness and inconsistencies with the views of other customers in the service area, and recommends the appropriate service candidates and service type in the business relationship between the service customer and provider. The selection report typically contains parts addressing the following concerns:

- Introduction: the background section defines key terms, such as the service types and service attributes, introduced in the evaluation tool and outlines the report contents and major findings.
- Comparisons: the tool compares the service customer’s input to the historical results as derived from surveys of existing customers that use the provider’s service. In our example, the customer’s input is compared with the experiential data collected from the email survey. In the comparisons, the tool detects potential issues the service customer may want to examine more closely, such as attribute priorities in the customer's input that deviate significantly from the survey results.
- Analysis and evaluation: The tool analyzes the inputs from the service customer and the service provider, and points out inconsistencies and incompleteness for decision-making. According to our evaluation model, four groups of service attributes can be directly related to the four service types: Functionality, Conformance quality attributes, Gap quality attributes, and Value quality attributes.
- Recommendations: Based on the analysis, the tool recommends the appropriate service type for the business relationship that should be established in the service delivery.
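The comparison part of the report can be pictured with a short Python sketch. The article does not state how the tool decides that a priority "deviates significantly" or how it detects incompleteness, so everything specific here is an assumption: the function name `compare_priorities`, the use of the survey mean as the experiential baseline, the threshold of 1.5 scale points, and all sample numbers are hypothetical.

```python
def compare_priorities(customer, survey_mean, threshold=1.5):
    """Flag potential issues in a customer's attribute priorities.

    customer:     attribute -> priority (1 to 5) entered by the customer
    survey_mean:  attribute -> mean priority in the experiential data
    threshold:    assumed cut-off (in scale points) for a "significant" deviation
    """
    issues = []
    for attribute, mean in survey_mean.items():
        if attribute not in customer:
            # Incompleteness: the customer did not rate this attribute.
            issues.append((attribute, "missing from customer input"))
        elif abs(customer[attribute] - mean) >= threshold:
            # Inconsistency with the views of other customers.
            issues.append((attribute, f"deviates from survey mean {mean:.1f}"))
    return issues


# Hypothetical inputs, not data from the email survey.
customer_input = {"Functionality": 5, "Security": 2, "Availability": 4}
experiential_means = {
    "Functionality": 4.6,
    "Security": 4.4,
    "Availability": 4.1,
    "Cost": 3.9,
}

for attribute, message in compare_priorities(customer_input, experiential_means):
    print(f"{attribute}: {message}")
```

With these sample values, Security is flagged because its priority is far below the assumed survey mean, and Cost is flagged because the customer did not rate it. A real tool might use a different baseline (for example, relative importance) or a per-attribute threshold.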

The update of the tool leads to the beginning of the next cycle. The lessons learned in the development of a specific tool are also used to improve the model in the inner cycle.

Thus far we have developed a prototype of a tool for email SaaS services that might be used in academic institutions. The details of the design of the tool and the deployment of the prototype can be found here: http://gradworks.umi.com/NR/62/NR62880.html. Based on this prototype, we have introduced some enhancements to the evaluation model that incorporate the role of a broker.

Conclusion

In this paper, we discussed service quality management and value co-creation (co-value) in building SaaS business relationships. To determine the co-value for both the service customer and provider, a specification of four service types (Ad-hoc, Defined, Managed, and Strategic) was defined based on maturity levels of the business relationships in SaaS delivery. This led to a conjecture that the intensification of the service type can be managed by the addition of quality measurement approaches. A web-based survey was conducted with a selected group of CIOs from service customer organizations to validate this conjecture. The four service attribute groups identified in the survey results can be consistently aligned with the incremental evolution of the four service types. The conjecture is used as a foundation for defining the SaaS evaluation model, which helps service customers select and monitor SaaS systems in service planning and operation. Based on the model, a SaaS evaluation tool was built and used to assist SaaS adoption in a particular service area. In particular, a case study was run to support decision making on email service adoption.

The results of this research are important initial steps in building a better understanding of co-value in business relationships between the service customer and provider in SaaS delivery. Based on these studies, the following research work can be pursued in the future: i) extending the use of the tool to other scenarios; ii) further investigations to assist in evolving the evaluation model; and iii) more conceptual surveys used as a tool to validate and improve the model.
