Abstract

The true method of knowledge is experiment.

William Blake

Poet, painter and inventor

“The Argument” (1788)

Startups searching for a business model face uncertainty. This research aims to demonstrate how B2B startups can use business experiments to discover and validate the desirability of their business model quickly and cost-effectively. The study follows a design science approach with two main steps: build and evaluate. We first created a B2B-Startup Experimentation Framework based on well-known earlier frameworks. We then applied the framework to the case of the German startup heliopas.ai. The framework consists of four steps: (1) implementation of a measurement system, (2) hypothesis development and prioritization, (3) discovery, and (4) validation. Within its application, we conducted business experiments, including online and offline advertisements as well as interviews. This research contributes in several ways to the understanding of how B2B startups can use business experiments to discover and validate their business models. First, the designed B2B-Startup Experimentation Framework can serve as a guideline for company founders. Second, the results were used to improve the existing business model of the German B2B startup heliopas.ai. Finally, applying the framework allowed us to formulate design principles for creating business experiments, which can be further tested in future studies.

1. Introduction

In recent years, conventional wisdom has urged founders to create a business plan that describes the size of the opportunity, the targeted problem, and the planned solution (Blank, 2013). This approach assumes that the target market is known and the business model is validated (Garvin, 2000). However, startups often do not meet these conditions, and many fail when they execute a business model built on untested assumptions (Lynn et al., 1996).

To support founders in searching for a business model, frameworks were created around the idea of conducting experiments in business settings. Technological advances in the last decade have dramatically lowered market entry barriers and the cost of running business experiments (Kerr et al., 2014), making business experimentation more viable and simpler to execute. Moreover, characteristics specific to the B2B market influence how business experiments are designed. In a B2B business, revenue is generated by fewer customers, each contributing more (Croll & Yoskovitz, 2013). Consequently, sample sizes are small, which makes statistical significance harder to achieve. Additionally, unlike with consumers, the decision-maker and the user might not be the same person, making it harder for a startup to sell a product to a company (Croll & Yoskovitz, 2013). Thus, more research is needed to determine how B2B startups can specifically use business experiments to learn about their business model. Recently, the COVID-19 pandemic has limited personal contact with customers and thus influenced the methodology of this research. These trends call for additional research in this field.
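To make the sample-size argument concrete, the sketch below applies the standard normal-approximation formula for comparing two conversion rates with a two-sided test. The rates (20% versus 30%), the 5% significance level, and the 80% power are illustrative assumptions, not values from this study, and the function name `sample_size_per_group` is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_test: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of subjects needed in EACH group to detect the
    difference between two proportions with a two-sided z-test.

    Illustrative sketch using the normal approximation; not the method
    used in the study.
    """
    if p_control == p_test:
        raise ValueError("the two proportions must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p_control + p_test) / 2               # pooled proportion under H0
    term = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * sqrt(p_control * (1 - p_control)
                            + p_test * (1 - p_test)))
    return ceil(term ** 2 / (p_control - p_test) ** 2)


if __name__ == "__main__":
    # Hypothetical example: detecting a lift from 20% to 30% conversion.
    print(sample_size_per_group(0.20, 0.30))
```

Under these assumptions, several hundred prospects per group are needed, far more than a small pool of B2B customers usually provides for a single experiment.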

In line with Berglund, Dimov and Wennberg (2018), who call for more research that yields practical insights for entrepreneurs, the goal of this research is twofold. First, the study provides empirical insights into the application of business experiments in the business model development process of B2B companies. Second, we investigate how to design and run these experiments. Berglund et al. (2018) recommended creating context-specific design principles in the form of pragmatic recommendations. Thus, this research focuses on extracting practical design principles that support entrepreneurs in improving their business experiment activities. It takes a problem-solution approach aimed at practical contributions for B2B startups rather than at enriching the existing theoretical literature. The study answers the following question: How can startups in a B2B market use business experiments to discover and validate the desirability of their business models quickly and cost-effectively?

To answer this question, we followed the two-step process proposed by March and Smith (1995) and tailored the research process to the case of a real-life startup, heliopas.ai. The case suits this research well, as heliopas.ai is searching for a business model that involves selling an application called WaterFox to farmers (a B2B context). The startup’s mission is to provide farmers with accurate soil moisture data combined with simple recommendations for more efficient field irrigation. To generate this data, the startup uses machine learning and multiple data sources, such as satellite imagery and local weather databases. Using WaterFox requires no equipment or high-priced sensors, which saves customers unnecessary costs and adoption effort. A framework was created to serve as a guideline for conducting business experiments tailored to heliopas.ai that account for a startup’s limited time and money. These experiments were intended to help discover and validate the desirability of the business model in a B2B market. As the startup is based in Karlsruhe, Germany, the initial market consisted of local farmers in Baden-Wuerttemberg and Rhineland-Palatinate.

This research proposes a B2B-Startup Experimentation Framework, a four-step procedure that reduces uncertainty and improves a startup’s business model. The framework combines existing processes and principles into one comprehensive guideline for conducting B2B business experiments. By applying the framework to heliopas.ai, the researchers were able to evaluate its applicability. Additionally, the findings led heliopas.ai to adjust its business and operations during the research, resulting in a better understanding of the suitability of channels, the value proposition, customer jobs-to-be-done, the customer segment, and product performance.

This paper is structured as follows. The next section briefly reviews the theories and frameworks used to develop the B2B-Startup Experimentation Framework. Section 3 lays out the methodology. In the subsequent sections, we develop the framework, apply it to the startup heliopas.ai, and summarize the findings, which we elaborate on in the discussion section. Finally, we discuss the limitations of the study and its practical implications for startups and researchers, and conclude the paper.

2. Theoretical Framework

Discovery and Validation

Discovery marks the initial step in the search for a business model. Its goal is to explore whether the general direction of thought regarding a business model is correct and to gain further insights (Bland & Osterwalder, 2020). Discovery therefore suits the early stages of experimentation. Since a startup operates under great uncertainty (Ries, 2011), decisions are made under ambiguity, with little to no knowledge about alternatives and consequences (Cooremans, 2012). Generally, validation activities ensure that customers’ needs and the defined requirements are met (Albers et al., 2017). Validating a business model confirms that the direction of thought is correct and corroborates findings from the discovery step (Bland & Osterwalder, 2020). Validation is thus the second step in the search for a business model (Bland & Osterwalder, 2020).

Business Model and Risk Factors

According to Brown and Katz (2009), an early business model entails three risk factors: desirability, feasibility, and viability. Desirability denotes the risk that a business model fails with regard to its market, demand, communication, or distribution. Feasibility denotes the risk that a business cannot access key resources, perform key activities, or find key partners (Brown & Katz, 2009). Viability denotes the risk that a business cannot generate sufficient revenue or incurs costs too high to make a profit, that is, that it will not be viable (Bland & Osterwalder, 2020). This research focuses on reducing desirability risk and therefore on the following business model components: customer segments, value proposition, channels, and customer relationships. Additionally, we explore the revenue model in terms of customers’ willingness to pay for heliopas.ai’s application.

Business Experiments

Business experiments take a scientific approach to generating insights into a company’s business model (Thomke, 2019). They reduce risk and uncertainty by yielding evidence regarding an underlying hypothesis (Bland & Osterwalder, 2020). Experiments can demonstrate a causal relationship by measuring the effect an action has on a situation (Hanington & Martin, 2012). In startups, business experiments are often run cheaply and quickly (Aulet, 2013). In this paper, business experiments are distinguished by their purpose, discovery or validation, following the definitions given above. Discovery experiments test whether the general idea behind a concept is acceptable, with the aim of establishing a proof of concept. Validation experiments have higher fidelity: they yield stronger evidence but require more resources, such as time, personnel, or money.

Growth Hacking

Growth Hacking aims at fast and sustainable growth through activities in market research, product development, and customer retention (Ellis, 2017). The Customer Acquisition Funnel is a core element of the Growth Hacking framework. It consists of five stages: acquisition, activation, retention, revenue, and referral (McClure, 2007). In the acquisition stage, the goal is to determine through which channels users, customers, and visitors arrive in a way that creates value for a startup (McClure, 2007). The activation stage shows how many acquired users have a positive first impression of the product (McClure, 2007). Retention measures whether users keep using the product. The revenue stage measures customers’ willingness to pay, whereas the referral stage measures whether users enjoy the product enough to recommend it to a friend (McClure, 2007).
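As an illustration of how the five funnel stages can be turned into numbers, the sketch below computes stage-to-stage conversion rates from user counts. The counts are invented and the function name is hypothetical; McClure (2007) defines the stages, not this code.

```python
# Stage order of the Customer Acquisition Funnel (McClure, 2007).
STAGES = ("acquisition", "activation", "retention", "revenue", "referral")


def stage_conversion_rates(counts: dict[str, int]) -> dict[str, float]:
    """Return the share of users that moves from each tracked stage to the
    next tracked stage. Stages missing from `counts` are skipped."""
    tracked = [stage for stage in STAGES if stage in counts]
    rates = {}
    for previous, current in zip(tracked, tracked[1:]):
        base = counts[previous]
        rates[f"{previous}->{current}"] = counts[current] / base if base else 0.0
    return rates


if __name__ == "__main__":
    # Hypothetical counts; referral is left untracked here.
    example = {"acquisition": 1000, "activation": 120,
               "retention": 30, "revenue": 6}
    for step, rate in stage_conversion_rates(example).items():
        print(f"{step}: {rate:.1%}")
```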

Customer Development Process

The Customer Development Process by Blank and Dorf (2012) is an iterative, customer-focused approach to searching for a business model. It incorporates business experiments and consists of two steps: customer discovery and customer validation. Customer discovery aims at finding initial customers by deriving testable hypotheses, collecting possible experiment designs (Blank & Dorf, 2012), and finally conducting the experiments. These initial experiments determine whether the envisioned value proposition matches a targeted customer segment. Next, the proposed solution is presented to customers to learn whether it serves their needs and to assess their willingness to pay for it. Customer validation applies the business model that results from the previous step (Trimi & Berbegal-Mirabent, 2012). The goal is to test whether the business model is repeatable and scalable. This is done by running more quantitative, high-fidelity experiments and acquiring actual sales, which shows how money spent on sales and marketing can generate revenue.

Lean Startup

The Lean Startup aims to reduce waste while creating a business model. It has three key principles: replacing planning with experimentation, Blank’s ‘getting out of the building’ approach, and agile development (Blank, 2013). The experimentation process is described by the Build-Measure-Learn feedback loop, which consists of three steps: build, measure, and learn. In the build step, it is essential to create a Minimum Viable Product (MVP) quickly after identifying the most important hypotheses (Ries, 2011). The goal of building an MVP is to identify the potential of the proposed solution (Kerr et al., 2014) and the target customers’ willingness to pay for it. The measure step collects data that can verify or falsify the hypotheses about product quality, price, and costs (Ries, 2011). In the learn step, the collected data are used to learn about the investigated hypotheses. The learning process shows whether an underlying hypothesis can be verified and indicates whether the MVP is a viable solution to the customer problem (Ries, 2011).

Four-Step Iterative Cycle

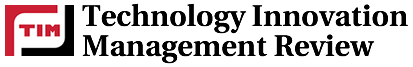

The Four-Step Iterative Cycle describes a structured business experimentation procedure that iterates through the design, build, run, and analyze steps until it achieves the desired outcome. In the design step, existing insights from observations and previous experiments are used to formulate testable hypotheses and design suitable experiments (Thomke, 2003). In the build step, researchers build physical or virtual prototypes or models to conduct the experiments (Thomke, 2003). The higher a prototype's fidelity and functionality, the stronger the generated evidence (Thomke, 2003). Subsequently, the experiment is run either in a controlled laboratory setting or in a real-life setting, which yields higher external validity (Thomke, 2003). Finally, the results are analyzed by comparing them to an expected outcome. If the hypothesis addressed by the experiment has been answered sufficiently, the cycle stops (Thomke, 2003). Otherwise, researchers reenter the design step with a modified experimental design, adjusted to the new insights gained in the process. Table 1 summarizes the presented frameworks and gives an initial comparison with the framework designed for this research.

Table 1. Framework comparison
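The control flow of the Four-Step Iterative Cycle can be sketched as a loop. In this hypothetical sketch, the four steps are passed in as functions, `analyze` decides whether the hypothesis has been answered sufficiently, and the iteration limit stands in for a startup’s time and budget constraints. The usage example merely replays the CARs reported later for the Facebook, Google Ads, and LinkedIn experiments to show the flow.

```python
from typing import Any, Callable

Step = tuple[Any, Any, bool]  # (plan, result, answered)


def iterative_cycle(design: Callable[[list[Step]], Any],
                    build: Callable[[Any], Any],
                    run: Callable[[Any], Any],
                    analyze: Callable[[Any, Any], bool],
                    max_iterations: int = 5) -> list[Step]:
    """Repeat design -> build -> run -> analyze until `analyze` reports the
    hypothesis as sufficiently answered or the iteration budget is used up."""
    history: list[Step] = []
    for _ in range(max_iterations):
        plan = design(history)            # design: use insights from earlier rounds
        prototype = build(plan)           # build: prototype, ad, landing page, ...
        result = run(prototype)           # run: expose it to (potential) customers
        answered = analyze(plan, result)  # analyze: compare with expected outcome
        history.append((plan, result, answered))
        if answered:
            break
    return history


if __name__ == "__main__":
    channels = ["Facebook", "Google Ads", "LinkedIn", "Press"]
    observed_car = {"Facebook": 0.0, "Google Ads": 0.0449, "LinkedIn": 0.3333}

    log = iterative_cycle(
        design=lambda history: channels[len(history)],  # next untested channel
        build=lambda channel: channel,                  # placeholder build step
        run=lambda channel: observed_car[channel],      # replayed result
        analyze=lambda channel, car: car >= 0.20,       # 20% CAR threshold
    )
    for channel, car, answered in log:
        print(channel, f"{car:.2%}", "stop" if answered else "redesign")
```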

3. Methodology

To create a business experimentation framework for B2B startups and gain insights into how B2B startups can use business experiments to discover and validate their business model quickly and efficiently, this research applied a two-step research process based on “design science” insights, as suggested by March and Smith (1995). The process consists of a build step and an evaluate step, summarized as follows.

In the build step, we conducted a literature review to shape a framework based on existing knowledge, the practical experience of respected practitioners (Thomke, 2003; Ries, 2011; Blank, 2012; Ellis, 2017), and previous research in this field (Thomke, 2003). Such practitioner knowledge is very popular among entrepreneurs; although it is not itself grounded in theory, it is considered a valuable source of knowledge in this field.

Next, we apply the framework to the case of heliopas.ai, a real-life startup that wants to improve its business model. This constitutes the evaluate step, which aims to show whether the created framework fulfills its purpose. The application also allows the researchers to deepen their empirical knowledge of how to run business experiments. Qualitative and quantitative data were collected during several business experiments, and we used these data to develop insights into heliopas.ai’s business model. Applying the framework and conducting the business experiments led us to formulate design principles that serve as recommendations for conducting business experiments; we describe them in the conclusion. These design principles can serve as a basis for future research on the value of business experiments.

4. Design and Application of the B2B-Startup Experimentation Framework

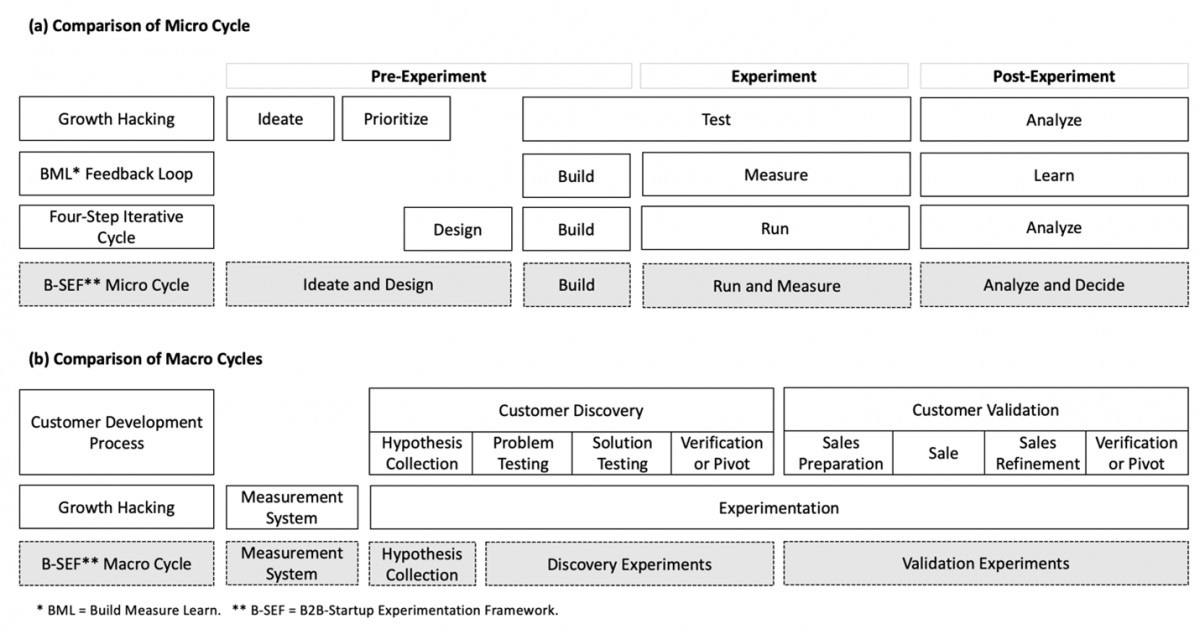

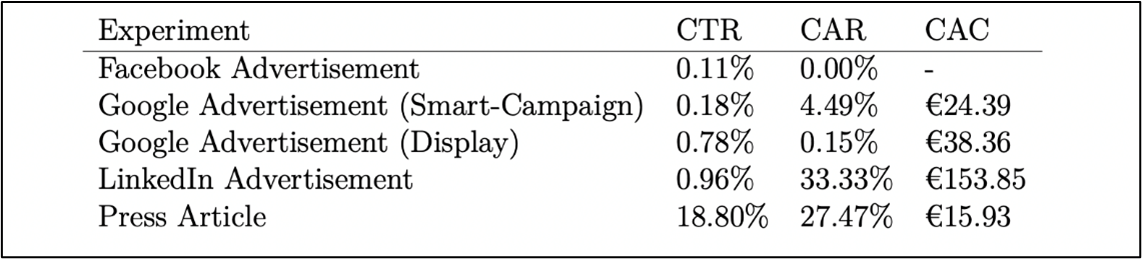

Based on the frameworks described in the theoretical part of this paper, we designed the B2B-Startup Experimentation Framework (B-SEF), outlined below. It consists of a macro experimentation framework and a micro experimentation framework (see Figure 1).

Figure 1. The B2B Startup Experimentation Framework (B-SEF)

The macro experimentation framework consists of four steps. The first step is designing a simple measurement system to collect data on acquiring and retaining new customers. The idea of implementing such a measurement system originates from the Growth Hacking methodology. It was feasible to apply here because the case startup already had a vision for its business model and a technology integrated into a smartphone application that selected customers were testing. The data are used to calculate conversion rates and customer acquisition costs (CAC) and to estimate customer lifetime value (CLV). In the second step, the Business Model Canvas (Osterwalder et al., 2010) is used to collect and prioritize hypotheses about the business.
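The text does not specify how CLV was estimated. A common simplification, assumed here, divides the monthly gross profit per customer by the monthly churn rate, while CAC is marketing spend divided by the customers acquired. All inputs below are hypothetical.

```python
def customer_acquisition_cost(marketing_spend: float, new_customers: int) -> float:
    """CAC: money spent on acquisition divided by customers acquired."""
    if new_customers <= 0:
        return float("inf")  # no customers acquired: cost per customer is unbounded
    return marketing_spend / new_customers


def customer_lifetime_value(monthly_revenue: float, gross_margin: float,
                            monthly_churn: float) -> float:
    """Simplified CLV: monthly gross profit per customer times the expected
    customer lifetime in months (1 / churn). An assumed model, not the
    study's."""
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in (0, 1]")
    return monthly_revenue * gross_margin / monthly_churn


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    cac = customer_acquisition_cost(marketing_spend=120.0, new_customers=8)
    clv = customer_lifetime_value(monthly_revenue=9.0, gross_margin=0.8,
                                  monthly_churn=0.10)
    print(f"CAC = {cac:.2f} EUR, CLV = {clv:.2f} EUR, CLV/CAC = {clv / cac:.1f}")
```

Comparing the two numbers per channel is one way to read whether acquisition spending can pay for itself under the assumed churn.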

Business experiments are conducted in the remaining two steps, discovery and validation (Bland & Osterwalder, 2020), which use discovery and validation experiments, respectively. This follows the recommendation of Blank and Dorf (2012) to treat the search for a business model as a two-step process of discovery and validation. In the discovery step, researchers aim to gain insights quickly and cost-effectively, as timing can be critical to a startup’s success. As Ries (2011) emphasizes, the goal is to learn quickly about the business model’s desirability. In the validation step, researchers design experiments to gather more reliable evidence. Adding a control group and running the test and control conditions simultaneously reduces the effects of extraneous variables.

The micro experimentation framework is adapted from the Four-Step Iterative Cycle by Thomke (2003). All business experiments are conducted and presented in a structured manner following this micro experimentation framework. The Four-Step Iterative Cycle is a core element of many frameworks and methods for startup experimentation. For instance, Brecht et al. (2020) used it to conduct experiments for platform business models, and Ries (2011) and Blank and Dorf (2012) described similar experimentation cycles in the Lean Startup and the Customer Development Process.

The B-SEF was applied to the startup heliopas.ai to gain insights into its business model and to empirically evaluate the framework’s applicability. Importantly, the study was subject to cost and time restrictions: each experiment had to be designed and run with a budget of less than €100 and within less than four weeks.

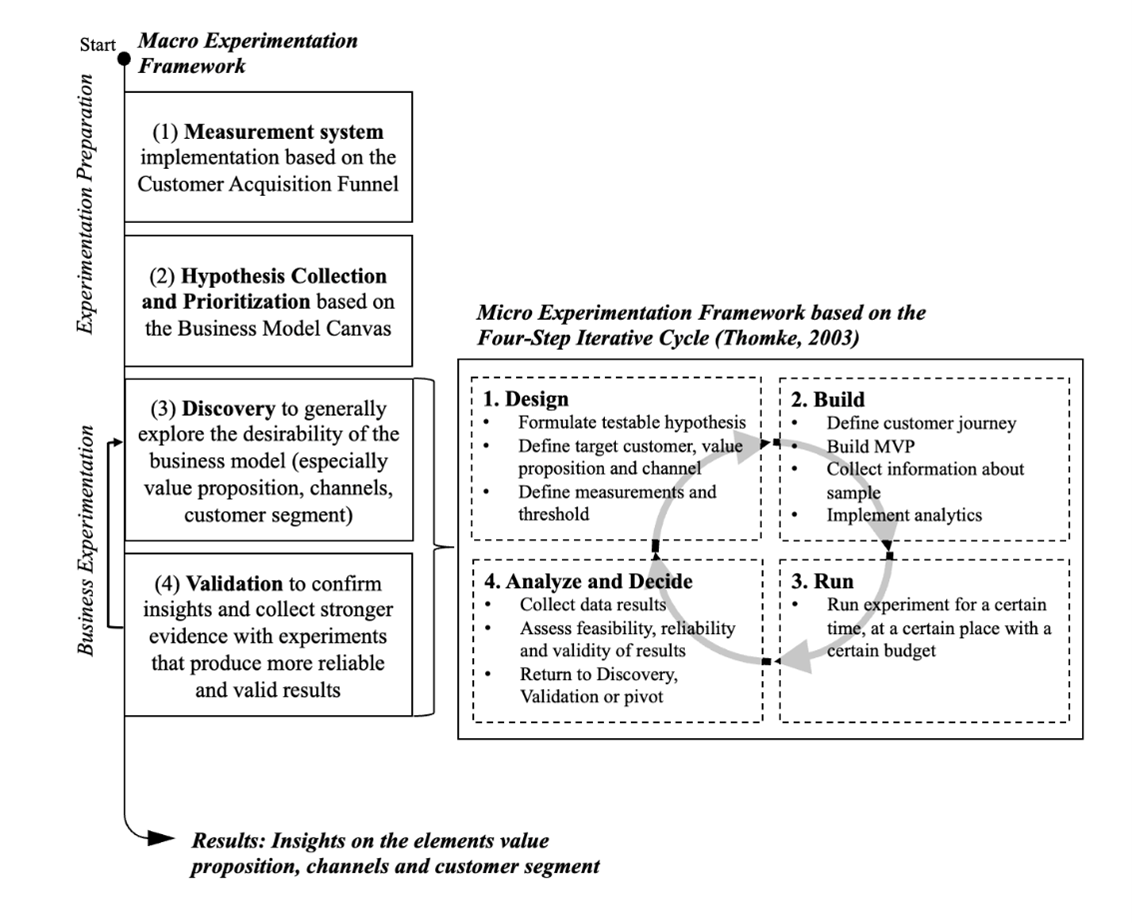

(1) Designing the Measurement System

Because Growth Hacking relies on experiments, it requires data collection. A common way to design a measurement system that suits this purpose is to use the Customer Acquisition Funnel described above. Accordingly, we designed the customer journey for heliopas.ai based on multiple metrics, which were defined and tracked. Table 2 provides an overview of these metrics, their definitions, and the Customer Acquisition Funnel stages to which they apply.

Table 2. Tracked metrics in heliopas.ai’s measurement system

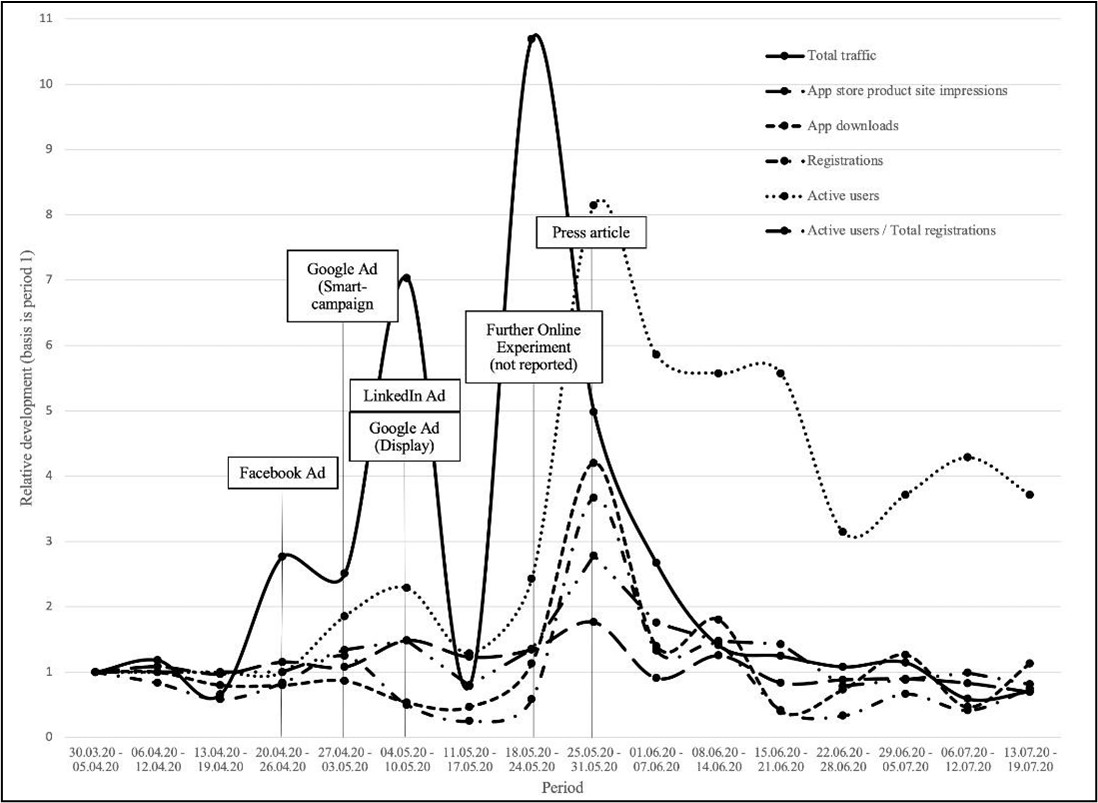

The acquisition stage consisted of metrics on the total traffic generated by sites related to WaterFox. We extracted landing-page traffic data from Facebook’s analytics and tracked traffic on the WaterFox web landing page with Google Analytics.

The activation stage consisted of metrics on product site impressions in Apple’s App Store and Google Play. Additionally, we tracked downloads of the WaterFox application from both app stores and new user registrations in the application. Product site impressions and downloads were tracked with App Store Connect and the Google Play Console.

For the retention stage, we defined three metrics. Users were split into three categories, occasional, standard, and heavy users, based on how often they had signed into the application in the last seven days. Because small errors in the data had a high impact (for example, developers’ activity in the application inflated the numbers), we needed first-hand data that were adaptable and transparent. Retention data were therefore processed in an Excel sheet built on data from the startup’s database, which we used to calculate daily retention metrics. An advantage of this approach was that the researchers could filter users manually, since user names and further contact information were available.
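The study processed retention data in an Excel sheet; the sketch below shows a comparable categorization in Python. The cut-offs (one to two, three to five, and six to seven sign-in days in the last seven days) and the exclusion of developer accounts by user ID are hypothetical assumptions, since the text does not state them.

```python
from collections import Counter
from datetime import date, timedelta


def categorize_users(sign_ins, today: date, excluded=frozenset()):
    """Classify users by the number of distinct days with a sign-in during
    the last seven days (including `today`).

    sign_ins: iterable of (user_id, date) pairs exported from the database.
    excluded: user IDs to ignore, e.g. developer test accounts (assumed).
    """
    window_start = today - timedelta(days=6)
    active_days: dict[str, set] = {}
    for user, day in sign_ins:
        if user in excluded or not window_start <= day <= today:
            continue
        active_days.setdefault(user, set()).add(day)

    categories = {}
    for user, days in active_days.items():
        n = len(days)
        # Hypothetical cut-offs; the study does not state its thresholds.
        if n >= 6:
            categories[user] = "heavy"
        elif n >= 3:
            categories[user] = "standard"
        else:
            categories[user] = "occasional"
    return categories


if __name__ == "__main__":
    today = date(2020, 5, 10)
    log = [("farmer_a", today - timedelta(days=d)) for d in range(7)]
    log += [("farmer_b", today - timedelta(days=d)) for d in (0, 2, 4)]
    log += [("farmer_c", today), ("dev_1", today), ("farmer_d", date(2020, 4, 1))]
    cats = categorize_users(log, today, excluded={"dev_1"})
    print(cats)
    print("active users:", len(cats), Counter(cats.values()))
```

Summing the three categories gives the active-user count, mirroring how the categories are aggregated in the funnel overview.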

The revenue stage tracked the number of users paying to use the WaterFox application.

The referral stage was not tracked because the study focused on the other stages. The collected data are presented in Figure 2, in which the three user categories are aggregated as active users.

Figure 2. Development of Metrics of the Customer Acquisition Funnel

We used absolute counts for a given period to calculate the conversion rates between customer journey phases: from app store product site impressions to app downloads, and from app downloads to registrations. These conversion rates were needed to calculate the CAC, since the customer journey could not be tracked after customers left the landing page and were referred to the app store.
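Because the journey breaks at the hand-off to the app store, registrations per channel have to be estimated. The sketch below shows one way to do this, assuming that each download-button click produces one app store product site impression and that the store-level conversion rates apply to every channel. The counts and spend are hypothetical, and the study’s exact calculation may differ.

```python
def conversion_rate(from_count: int, to_count: int) -> float:
    """Share of users moving from one customer journey phase to the next."""
    return to_count / from_count if from_count else 0.0


def estimated_cac(spend: float, download_clicks: int,
                  impressions_to_downloads: float,
                  downloads_to_registrations: float) -> float:
    """Estimate the cost per registered user for one channel.

    Assumption: every download-button click on the landing page becomes one
    app store product site impression, and the store-wide conversion rates
    hold for this channel's visitors.
    """
    registrations = (download_clicks * impressions_to_downloads
                     * downloads_to_registrations)
    return spend / registrations if registrations else float("inf")


if __name__ == "__main__":
    # Hypothetical absolute counts from the app stores for one period.
    r1 = conversion_rate(from_count=500, to_count=200)  # impressions -> downloads
    r2 = conversion_rate(from_count=200, to_count=100)  # downloads -> registrations
    print(f"{r1:.0%}, {r2:.0%}")
    print(f"CAC = {estimated_cac(50.0, 10, r1, r2):.2f} EUR")
```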

(2) Hypothesis Collection and Prioritization

To collect and prioritize initial hypotheses, we used the Business Model Canvas for heliopas.ai. As our focus was on developing a desirable business model, we concentrated on the building blocks value proposition, customer segments, channels, customer relationships, and revenue model. We prioritized hypotheses about channels, in line with the founders’ vision of selling their application online, which would allow them to distribute the app efficiently at a low CAC and easily reach early adopters. Hence, the following section focuses on experiments exploring channels.
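The study prioritized hypotheses according to the founders’ vision. For comparison, the sketch below uses a different, commonly used heuristic: hypotheses that are important to the business model but backed by little evidence are ranked first, optionally restricted to chosen canvas building blocks. The 1 to 5 scales, the scoring rule, and the example hypotheses are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    statement: str
    building_block: str  # Business Model Canvas building block
    importance: int      # 1 = minor ... 5 = critical for the business model
    evidence: int        # 1 = no evidence yet ... 5 = strong evidence

    @property
    def priority(self) -> int:
        # Assumed heuristic: important hypotheses with weak evidence go first.
        return self.importance * (6 - self.evidence)


def prioritize(hypotheses, focus_blocks=None):
    """Sort hypotheses by descending priority, keeping only the building
    blocks in `focus_blocks` when it is given."""
    selected = [h for h in hypotheses
                if focus_blocks is None or h.building_block in focus_blocks]
    return sorted(selected, key=lambda h: h.priority, reverse=True)


if __name__ == "__main__":
    backlog = [
        Hypothesis("Farmers want irrigation recommendations",
                   "value proposition", importance=5, evidence=3),
        Hypothesis("Farmers can be reached via online ads",
                   "channels", importance=5, evidence=1),
        Hypothesis("Farmers will pay for the app",
                   "revenue model", importance=4, evidence=2),
    ]
    for h in prioritize(backlog):
        print(h.priority, h.building_block, "|", h.statement)
```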

(3) Discovery Experiments

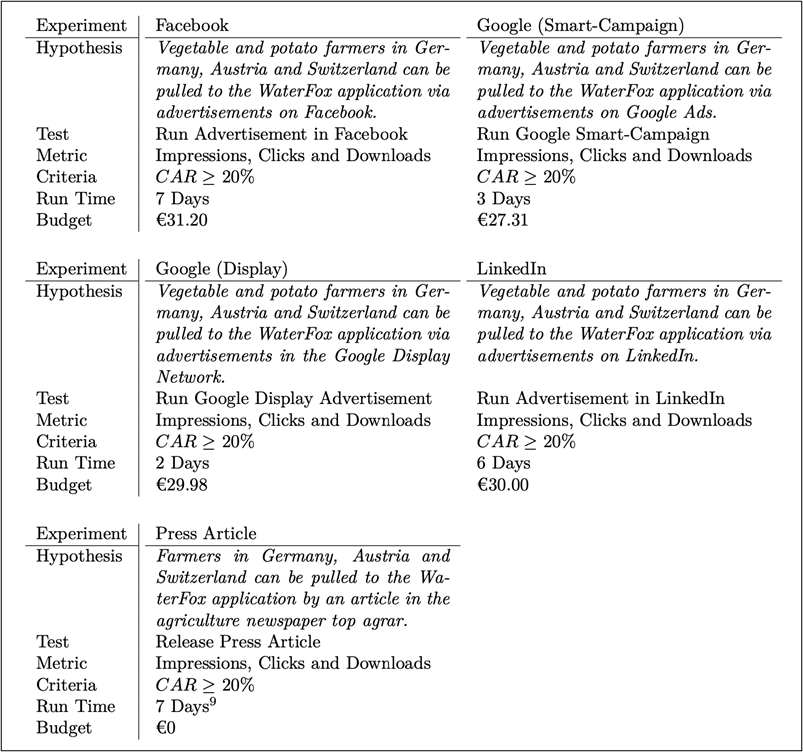

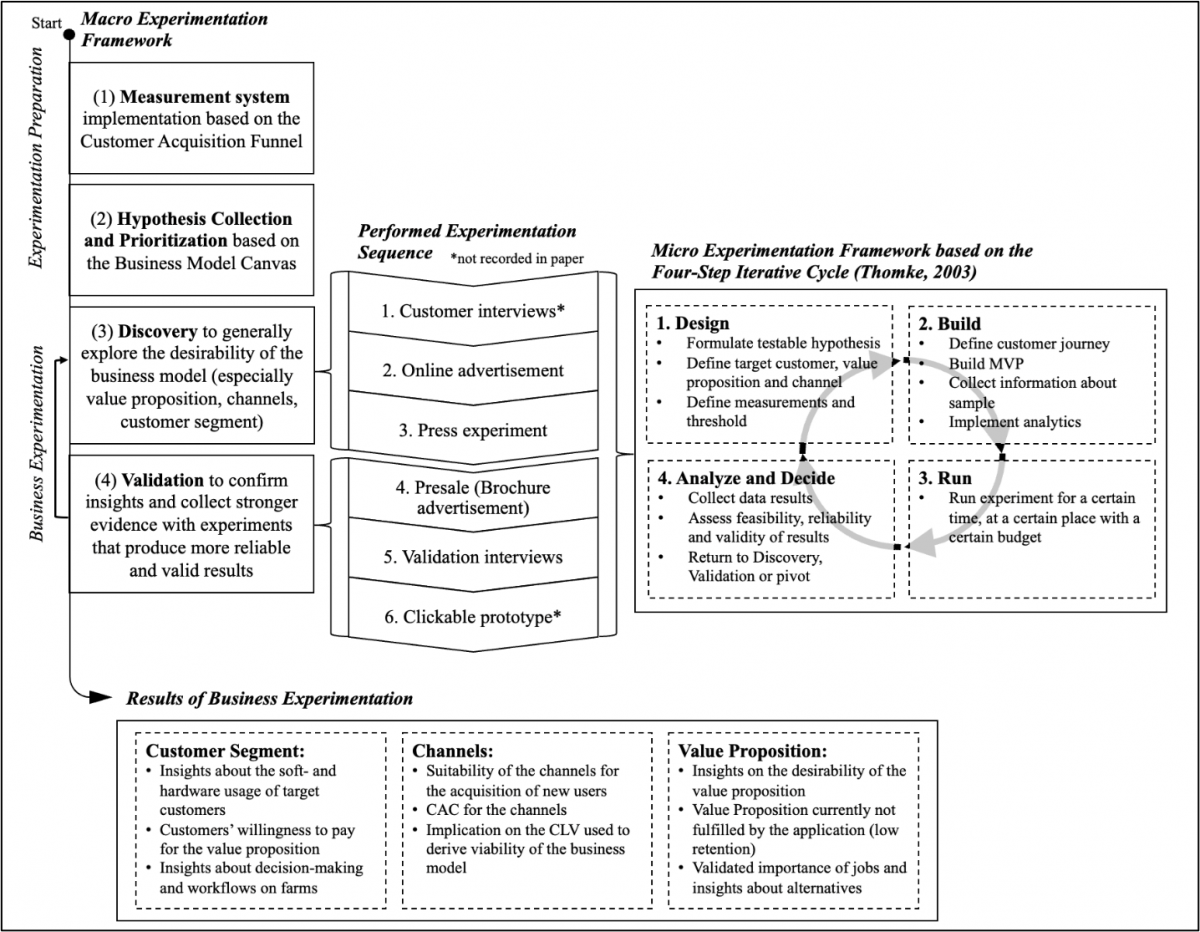

For each discovery experiment, we tracked the number of impressions, clicks on the advertisement leading to the landing page, and download-button clicks on the landing page to evaluate channel suitability. From these data, we calculated the Customer Activation Rate (CAR), defined as the number of active persons on the landing page (those who clicked the download button) divided by the number of landing-page visitors. We set a CAR threshold of 20%, a common value for experiments in a startup environment. Additionally, the calculated CAC helps to evaluate a channel’s viability. Table 3 provides an overview of the discovery experiments conducted.

Table 3. Overview of conducted Discovery Experiments
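Applied to the counts reported in the experiment descriptions below, the CAR definition can be checked with a few lines of code. For the advertisement experiments, this sketch assumes that every advertisement click corresponds to one landing-page visitor; the function name `evaluate_channels` is hypothetical.

```python
CAR_THRESHOLD = 0.20  # threshold used for all discovery experiments

# (landing-page visitors, download-button clicks) as reported in the text;
# for ads, visitors are approximated by advertisement clicks (assumption).
DISCOVERY_RESULTS = {
    "Facebook ads": (5, 0),
    "Google Ads smart campaign": (89, 4),
    "Google Display Network": (539, 8),
    "LinkedIn ads": (6, 2),
    "Press article": (91, 25),
}


def customer_activation_rate(visitors: int, download_clicks: int) -> float:
    """CAR = active persons (download-button clicks) / landing-page visitors."""
    return download_clicks / visitors if visitors else 0.0


def evaluate_channels(results, threshold=CAR_THRESHOLD):
    """Map each channel to (CAR, whether it meets the threshold)."""
    evaluation = {}
    for channel, (visitors, clicks) in results.items():
        car = customer_activation_rate(visitors, clicks)
        evaluation[channel] = (car, car >= threshold)
    return evaluation


if __name__ == "__main__":
    for channel, (car, ok) in evaluate_channels(DISCOVERY_RESULTS).items():
        print(f"{channel:28s} CAR = {car:6.2%}  {'meets' if ok else 'below'} threshold")
```

With only six visitors, the LinkedIn CAR rests on a very small sample, which illustrates the significance problem for B2B experiments raised in the introduction.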

Facebook advertisement experiment. The Facebook advertisement experiment analyzed whether customers were pulled to the WaterFox application via advertisements on Facebook. In the Facebook advertisement manager, the target customer was set to the current persona. A customer journey was designed that led customers from an advertisement to a landing page, then to the app store, and finally to the application. The advertisement ran for seven days, from April 22 to April 28, 2020, with a budget of €31.20. It resulted in 4,557 impressions, 5 clicks on the advertisement, and 0 download-button clicks on the landing page, giving a CAR of 0%, below the threshold of 20%. Therefore, the hypothesis was falsified, and we decided to try a different channel in the next discovery experiment.

Google advertisement experiments. The Google advertisement experiment investigated whether customers were pulled to the WaterFox application via Google Ads. It ran as a smart campaign, meaning that bidding, targeting, and ad creation were automated by Google Ads (Google, 2020). The landing page from the Facebook advertisement experiment was reused, giving customers an almost identical customer journey. The experiment ran for three days, from April 27 to April 29, 2020, with a budget of €27.31, resulting in 48,211 impressions, 89 advertisement clicks, and 4 download-button clicks. The resulting CAR of 4.49% was also below the 20% threshold; hence, the hypothesis was falsified. The Google Ads manager provides further information on keyword performance, the device types of targeted customers, and advertisement networks. The highest CAR, 11.11%, was achieved in the Google Display Network. Given this indication, we decided to run a second Google advertisement in the Google Display Network.

The Google Display Network experiment used a display advertisement that was shown only on certain websites and not in Google Search. The experiment ran for three days from May 6 to May 7, 2020, with a budget of €29.98, resulting in 69,100 impressions, 539 advertisement clicks, and 8 download-button clicks. This corresponds to a CAR of 1.48% (8 of 539), below the threshold of 20%. Therefore, the hypothesis was falsified, and we decided to explore other online channels.

LinkedIn advertisement experiment. The LinkedIn advertisement experiment investigated whether customers were pulled to the WaterFox application via advertisements on LinkedIn. To test this hypothesis, we designed a LinkedIn advertisement that sent potential customers to a landing page with detailed information about the value proposition of the WaterFox application. To represent the startup’s current target customer persona, the target audience was defined as men aged 25 to 34 who are interested in agricultural topics. The customer journey from the previous experiments was reused, the only difference being that customers now started at the LinkedIn advertisement. Data were collected with LinkedIn’s campaign manager, which was connected to the landing page via a JavaScript tag that counts conversions. The experiment ran from May 5 to May 10, 2020, in Germany, Austria, and Switzerland, with a budget of €30, resulting in 6 advertisement clicks and 2 download-button clicks. The resulting CAR of 33.33% meets the threshold.

Press article experiment. The press article experiment tested whether customers could be pulled into the WaterFox application via an article in an agricultural newspaper. The hypothesis was derived from interviews that the startup conducted with customers during the business experimentation process. To measure all online activity on the landing page, we implemented Google Analytics and Google Tag Manager. To test the hypothesis, we sent a press release containing key information about the WaterFox application to several newspapers, drawing on the network of the local startup accelerator to contact them. A link below the article referred readers directly to the WaterFox landing page, and referrals from this link were tracked with Google Analytics. The newspaper top agrar published the article on May 27, 2020. It resulted in 484 unique article reads, 91 landing-page visitors, and 25 download-button clicks, giving a CAR of 27.47%, which meets our threshold. We estimated the cost of running this experiment from the average price of a freelance writing service on upwork.com.

(4) Validation Experiments

After identifying suitable channels for acquiring new users, we shifted our focus from the discovery phase to the validation phase. In the following, we present two validation experiments: the brochure advertisement and the validation interview.

Brochure experiment. The brochure advertisement experiment tested whether customers were willing to pay €9 for the envisioned version of the WaterFox application and whether customers could be acquired by post. Again, a customer journey was set up. To test willingness to pay, two versions of the brochure were designed that differed in price: the control group received a brochure offering the application for €3, the test group one offering it for €9. Whether post delivery is a suitable channel for acquiring customers was to be validated or refuted by the subsequent validation interview experiment.

To run this experiment, we needed farmers’ postal addresses, which we obtained by screening several websites and platforms for contacts. The sample comprised 34 farmers, divided equally between the test and control groups. Printing and sending the brochures cost €47.73. The brochures received no responses from the contacted persons. The follow-up validation interview experiment revealed a possible reason for the non-responses and disclosed valuable information for the business model.
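The text does not say how the 34 contacts were assigned to groups or how responses would have been compared. A minimal sketch, assuming random assignment and Fisher’s exact test (suited to small 2x2 samples), shows both steps; the contact IDs and the seed are hypothetical. With zero responses in both groups, the test returns p = 1, so no price effect could be detected.

```python
import random
from math import comb


def split_groups(contacts, seed=None):
    """Randomly assign contacts to a control and a test group of
    (almost) equal size."""
    shuffled = list(contacts)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table
        control: a responded, b did not
        test:    c responded, d did not
    Sums the probabilities of all tables with the same margins that are at
    most as likely as the observed one."""
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def prob(x: int) -> float:
        # Hypergeometric probability of x responders in the control group.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_observed = prob(a)
    low, high = max(0, col1 - row2), min(row1, col1)
    p_value = sum(prob(x) for x in range(low, high + 1)
                  if prob(x) <= p_observed * (1 + 1e-7))
    return min(1.0, p_value)


if __name__ == "__main__":
    contacts = [f"farmer_{i:02d}" for i in range(34)]  # hypothetical IDs
    control, test = split_groups(contacts, seed=42)
    print(len(control), len(test))  # 17 17
    # No responses in either group (EUR 3 control, EUR 9 test).
    print(fisher_exact_two_sided(0, 17, 0, 17))
```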

Interview experiment. Besides investigating the cause of the non-responses to the brochures, the interview experiment aimed to validate the current understanding of customer problems and jobs involving farm irrigation management. More precisely, it investigated how important certain tasks are to target customers, measured by the frequency and effort of completing a task or enduring a burden. Furthermore, we revisited the hypothesis from the previous experiments by assessing the suitability of the press channel.

Additionally, we aimed to investigate target customers’ current use of digital products. Jobs were formulated as statements. For each statement, we asked whether the customer actually performed the job, how often it had been completed in the last four weeks, and how much time and money it required. Interviewees were also asked about several characteristics of their farm, to place the provided information in the right context and to help avoid biased or misleading results. The interviews were conducted by phone: we read out the job statements to the participants and recorded their responses. Of the 34 contacts from the brochure experiment, we reached out to 31, and five agreed to an interview. The others were unwilling to be interviewed, mostly due to time pressure and a high workload, as the COVID-19 pandemic limited the availability of seasonal workers. The interviews were coded to categorize the answers and systematically extract the results. We briefly present the results of the validation experiments in the following section.
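To illustrate how coded job statements can be turned into an importance measure, the sketch below aggregates hypothetical interview records per job. Scoring importance as the frequency in the last four weeks multiplied by the hours per occurrence is an assumption for illustration; the study measured importance by frequency and effort but does not give a formula.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class JobResponse:
    interviewee: str
    job: str
    performs_job: bool
    times_last_4_weeks: int = 0
    hours_per_time: float = 0.0
    cost_eur: float = 0.0


def summarize_jobs(responses):
    """Aggregate coded interview answers per job statement."""
    by_job: dict[str, list[JobResponse]] = {}
    for response in responses:
        by_job.setdefault(response.job, []).append(response)

    summary = {}
    for job, answers in by_job.items():
        doers = [r for r in answers if r.performs_job]
        summary[job] = {
            "share_performing": len(doers) / len(answers),
            # Assumed importance score: frequency x time effort per occurrence.
            "importance": mean(r.times_last_4_weeks * r.hours_per_time
                               for r in doers) if doers else 0.0,
            "mean_cost_eur": mean(r.cost_eur for r in doers) if doers else 0.0,
        }
    return summary


if __name__ == "__main__":
    coded = [  # hypothetical coded answers
        JobResponse("I1", "check soil moisture", True, 8, 0.5, 0.0),
        JobResponse("I2", "check soil moisture", True, 4, 1.0, 10.0),
        JobResponse("I1", "plan irrigation", True, 4, 2.0, 0.0),
        JobResponse("I2", "plan irrigation", False),
    ]
    for job, stats in summarize_jobs(coded).items():
        print(job, stats)
```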

5. Results

We gained insights into the company’s channels, value proposition, customer segment, and product performance. Table 4 summarizes the results of the experiments conducted in the discovery phase of the B-SEF. The collected data show that the Facebook and Google advertisements did not reach the predetermined CAR threshold of 20%. In contrast, the LinkedIn advertisement and press article experiments exceeded the threshold. Based on the measurement system and the experimental data, acquiring one registered user cost €153.85 via LinkedIn and €4.13 via the press. These results were valuable for the startup in evaluating the desirability of its business model, as they showed how it can acquire new customers and at what cost.

Table 4. Results of Discovery Experiments.

The distributed brochures did not result in any acquisitions. The follow-up interviews conducted to investigate the lack of response revealed that customers have limited time and chose not to spend it reading a brochure. Additionally, the interviews yielded insights into software and hardware usage and willingness to pay for the product. These insights helped to evaluate how desirable certain elements of the business model, such as the value proposition, were. Figure 3 summarizes the key findings of applying the B-SEF at heliopas.ai.

Figure 3. The B2B Startup Experimentation Framework (B-SEF) with Results

6. Discussion of Results and Proposed Design Principles

This research set out to assess the business model of heliopas.ai. By conducting a series of business experiments outlined by the B2B Startup Experimentation Framework (B-SEF), with an emphasis on the discovery and validation steps, we gained valuable insights that led to improvements in the business model. With a budget of only €118.49, the experiments tested the desirability of the business model and yielded the following results.

The discovery experiments were run at different times, so extraneous variables were not held constant. This was acceptable because the discovery experiments aimed only to establish whether each channel could reach target customers quickly. With a CAR of 27.47%, the press article experiment exceeded our 20% threshold. One possible reason for this performance is that farmers trust the information published by the newspaper top agrar and are therefore more likely to visit the landing page and download the WaterFox application. If this trust does lead to more landing page visits and conversions, the article could also serve as a reference in future experiments.
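Because B2B startups typically work with small samples, an observed rate such as a CAR carries considerable uncertainty. The following sketch, which is not part of the B-SEF, uses the Wilson score interval (a standard binomial confidence interval) to check whether an observed rate lies clearly above the 20% threshold. The text reports only the press article's rate, not the underlying counts, so the counts used here are hypothetical.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p = successes / trials
    z2 = z * z
    denom = 1 + z2 / trials
    center = (p + z2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def clearly_above(successes: int, trials: int, threshold: float = 0.20, z: float = 1.96) -> bool:
    """True only if the whole interval lies above the threshold."""
    lower, _ = wilson_interval(successes, trials, z)
    return lower > threshold


if __name__ == "__main__":
    # Hypothetical counts: the same 25% rate at two sample sizes.
    for conversions, visitors in [(5, 20), (250, 1000)]:
        low, high = wilson_interval(conversions, visitors)
        print(f"{conversions}/{visitors}: [{low:.3f}, {high:.3f}]",
              clearly_above(conversions, visitors))
```

With 5 conversions out of 20 visitors (25%), the 95% interval runs from roughly 11% to 47%, so such a result would not yet clearly exceed 20%; the same 25% rate over 1,000 visitors would. Requiring the lower bound to exceed the threshold is a conservative choice that suits validation more than the quick discovery experiments described above.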

The results of the brochure advertisement emphasize the importance of reaching customers through a channel they already use. The postal delivery channel was not validated and provided no answers about customer willingness to pay. The validation interviews showed that interviewees use different hardware and software, which makes it difficult to integrate the WaterFox application into existing customer workflows. One interviewee stated that they were annoyed at having to document information in several IT systems. This fragmentation was therefore an obstacle for heliopas.ai in selling its product to other businesses and finding innovators.

The results of the interview experiment reveal the benefit of a qualitative approach. This experiment yielded substantial insights into customers’ jobs, the suitability of the postal channel used for the brochures, and customer willingness to pay. Since all interviewees were exposed to the same method, this was a within-subjects experiment (Price et al., 2017). Because the same participants formed all control and test groups, the design provided a high level of control over extraneous variables, which was an advantage of the validation interview experiment.

The novelty of the designed framework is that it was tailored to a real B2B startup. Although this may limit its generalizability, it also increases its suitability for this particular startup. By making the implementation of a measurement system the first step of the process, this research departs from the business experimentation frameworks of Blank and Dorf (2012), Ries (2011), and Thomke (2003). Our measurement system was adapted from the Growth Hacking framework. Incorporating it was justified for heliopas.ai because the founders had already brought the startup to a relatively advanced stage at the time of this research. Just as Brecht et al. (2020) focused on business experiments for platform business models, the B-SEF focuses on business models in the B2B market. Compared with other frameworks, the B-SEF also suggests concrete experiments.
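A measurement system of this kind can be sketched as a funnel that records how many users reach each stage and computes the conversion rate between consecutive stages, in the spirit of funnel metrics such as McClure's (2007) pirate metrics. The stage names below are hypothetical, loosely modeled on the ad, landing page, and WaterFox download steps mentioned earlier, and the sketch does not reproduce the study's actual measurement system.

```python
from typing import Dict, Sequence

# Hypothetical funnel stages (assumption): the study's measurement system
# may track different events.
STAGES: Sequence[str] = (
    "ad_impression",
    "landing_page_visit",
    "app_download",
    "registration",
)


def stage_conversion_rates(
    counts: Dict[str, int], stages: Sequence[str] = STAGES
) -> Dict[str, float]:
    """Conversion rate between each pair of consecutive funnel stages."""
    rates: Dict[str, float] = {}
    for prev, curr in zip(stages, stages[1:]):
        base = counts.get(prev, 0)
        # Missing or empty stages yield 0.0 instead of a division by zero.
        rates[f"{prev}->{curr}"] = counts.get(curr, 0) / base if base else 0.0
    return rates


def overall_conversion(counts: Dict[str, int], stages: Sequence[str] = STAGES) -> float:
    """Share of users entering the first stage who reach the last stage."""
    first = counts.get(stages[0], 0)
    return counts.get(stages[-1], 0) / first if first else 0.0


if __name__ == "__main__":
    example = {"ad_impression": 1000, "landing_page_visit": 100,
               "app_download": 30, "registration": 15}
    for step, rate in stage_conversion_rates(example).items():
        print(f"{step}: {rate:.1%}")
    print(f"overall: {overall_conversion(example):.1%}")
```

Measuring each transition separately shows where in the funnel a channel loses customers, which a single end-to-end rate would hide.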

Despite their limited generalizability, these business experiments and the derived design principles can give other startup founders ideas for designing their own experiments (see Table 4). The design principles from this research follow the structure “to achieve X in situation Y, something like Z will help” (Berglund et al., 2018). They are currently hypotheses derived from applying the framework and require further empirical research to confirm and validate (or refute) them statistically.

References

Albers, A., Behrendt, M., Klingler, S., Reiß, N., & Bursac, N. 2017. Agile product engineering through continuous validation in PGE - Product generation engineering. Design Science, 3(5): 19. DOI: https://doi.org/10.1017/dsj.2017.5.

Aulet, B. 2013. Disciplined Entrepreneurship: 24 steps to a successful startup. Hoboken, New Jersey: Wiley.

Berglund, H., Dimov, D., & Wennberg, K. 2018. Beyond Bridging Rigor and Relevance: The three-body problem in entrepreneurship. Journal of Business Venturing Insights, 9: 87-91. DOI: https://doi.org/10.1016/j.jbvi.2018.02.001.

Bland, D.J., & Osterwalder, A. 2020. Testing Business Ideas. Hoboken, New Jersey: John Wiley & Sons, Inc.

Blank, S., & Dorf, B. 2012. The Startup Owner's Manual: The Step-by-Step Guide for Building a Great Company. Pescadero, CA: K & S Ranch.

Blank, S. 2013. Why the lean start-up changes everything. Harvard Business Review.

Brecht, P., Niever, M., Kerres, R., & Hahn, C. 2020. Smart platform experiment cycle (SPEC): A validation process for digital platforms. Paper presented at the Thirteenth International Tools and Methods of Competitive Engineering Symposium (TMCE 2020).

Brown, T., & Katz, B. 2009. Change by Design: How design thinking can transform organizations and inspire innovation (1st ed.). New York: HarperCollins.

Cooremans, C. 2012. Investment in energy efficiency: Do the characteristics of investments matter? Energy Efficiency, 5: 497-518. DOI: https://doi.org/10.1007/s12053-012-9154-x.

Croll, A., & Yoskovitz, B. 2013. Lean Analytics: Use data to build a better startup faster. Sebastopol, CA: O’Reilly.

Ellis, S. 2017. Hacking Growth: How today's fastest-growing companies drive breakout success. New York: Crown Business.

Garvin, D.A. 2000. Learning in Action: A guide to putting the learning organization to work. Boston, MA: Harvard Business School Press.

Google. 2020. About smart display campaigns - Google Ads Help. Retrieved July 30, 2020, from https://support.google.com/google-ads/answer/7020281?hl=en.

Hanington, B., & Martin, B. 2012. Universal Methods of Design: 100 ways to research complex problems, develop innovative ideas, and design effective solutions. Beverly, MA: Rockport Publishers.

Kerr, W.R., Nanda, R., & Rhodes-Kropf, M. 2014. Entrepreneurship as experimentation. Journal of Economic Perspectives, 28(3): 25-48. DOI: https://doi.org/10.1257/jep.28.3.25.

Lynn, G.S., Morone, J.G., & Paulson, A.S. 1996. Marketing and Discontinuous Innovation: The Probe and Learn Process. California Management Review, 38: 8-37. DOI: https://doi.org/10.2307/41165841.

March, S.T., & Smith, G.F. 1995. Design and natural science research on information technology. Decision Support Systems, 15(4): 251-266. DOI: https://doi.org/10.1016/0167-9236(94)00041-2.

McClure, D. 2007. Product Marketing for Pirates. Retrieved August 12, 2020, from https://500hats.typepad.com/500blogs/2007/06/internet-market.html.

Osterwalder, A., Pigneur, Y., & Clark, T. 2010. Business Model Generation: A handbook for visionaries, game changers, and challengers. Hoboken, NJ: Wiley.

Price, P., Jhangiani, R., Chiang, I.-C., Leighton, D., & Cuttler, C. 2017. Research Methods in Psychology (3rd ed.). DOI: https://doi.org/10.17605/OSF.IO/HF7DQ.

Ries, E. 2011. The Lean Startup: How today's entrepreneurs use continuous innovation to create radically successful businesses. New York: Crown Business.

Thomke, S.H. 2003. Experimentation Matters: Unlocking the potential of new technologies for innovation. Boston, MA: Harvard Business School Press.

Thomke, S.H. 2019. Experimentation Works: The surprising power of business experiments. Boston, MA: Harvard Business Review Press.

Trimi, S., & Berbegal-Mirabent, J. 2012. Business model innovation in entrepreneurship. International Entrepreneurship and Management Journal, 8(4): 449-465.

Keywords: B2B Startup, Business Experiment Design, Business Experiments, business model, Customer Development Process, Four-Step Iterative Cycle, Growth Hacking, lean startup