Many of life’s failures are people who did not realize how close they were to success when they gave up.

Thomas Edison (1847–1931)

Inventor and industrialist

Abstract

The concept of a “living lab” is a relatively new research area and phenomenon that facilitates user engagement in open innovation activities. Studies on living labs show that the users’ motivation to participate in a field test is higher at the beginning of the project than during the rest of the test, and that participants have a tendency to drop out before completing the assigned tasks. However, the literature still lacks theories describing the phenomenon of drop-out within the area of field tests in general and living lab field tests in particular. As the first step in constructing a theoretical discourse, the aims of this study are to present an empirically derived taxonomy for the various factors that influence drop-out behaviour; to provide a definition of “drop-out” in living lab field tests; and to understand the extent to which each of the identified items influences participant drop-out behaviour. To achieve these aims, we first extracted factors influencing drop-out behaviour in the field test from our previous studies on the topic, and then we validated the extracted results across 14 semi-structured interviews with experts in living lab field tests. Our findings show that identified reasons for dropping out can be grouped into three themes: innovation-related, process-related, and participant-related. Each theme consists of three categories with a total of 44 items. In this study, we also propose a unified definition of “drop-out” in living lab field tests.

Introduction

Studies on open innovation have increasingly emphasized the role of individual users as collaborators in the innovation processes, and users are now considered one of the most valuable external sources of knowledge and a key factor for the success of open innovation (Jespersen, 2010). One of the more recent approaches to managing open innovation processes is the living lab, where individual users are involved to co-create, test, and evaluate digital innovations in open, collaborative, multi-contextual, and real-world settings (Bergvall-Kåreborn et al., 2009; Leminen et al., 2012; Ståhlbröst, 2008). A major principle within living lab research consists of capturing the real-life context in which an innovation is used by end users by means of a multi-method approach (Bergvall-Kåreborn et al., 2015; Schuurman, 2015). The process of innovation development in the living lab setting can happen in different phases, including exploration, design, implementation, test, and evaluation (Ståhlbröst, 2008). Nevertheless, testing a product, service, or system, as one of the key components of living labs, has received more attention than other phases of innovation development (Claude et al., 2017). Although we have not found any clear description or definition for the term “field test” (nor for the term “field trial”, which has been used interchangeably in some literature), the Merriam-Webster Dictionary (2008) says that the aim of conducting a field test is “to test (a procedure, a product, etc.) in actual situations reflecting intended use”. In a living lab setting, a field test is a user study in which test users interact with an innovation in their real-life everyday use context while testing and evaluating it (Georges et al., 2016). What distinguishes living lab field tests from traditional field tests is that the commercial maturity of the prototyped product, service, or system is higher in traditional field tests than in living lab field tests. In addition, living lab field tests are usually conducted in an open environment, in contrast to traditional field tests, where the testing is undertaken within a controlled situation. As digital innovations are one of the key aspects of living lab activities (Bergvall-Kåreborn et al., 2009), in this study, we focus on digital products, services, or systems as the object of living lab field tests.

Involving individual users in the process of developing IT systems is a key dimension of open innovation that contributes positively to new innovations as well as system success, system acceptance, and user satisfaction (Bano & Zowghi, 2015; Leonardi et al., 2014; Lin & Shao, 2000). However, when it comes to testing a digital innovation, it is recognized that keeping users motivated is more challenging than motivating them to start participating in a project in the first place (Ley et al., 2015; Pedersen et al., 2013; Ståhlbröst & Bergvall-Kåreborn, 2013). Consequently, users tend to drop out of a field test before the project or activity has ended, as the motivations and expectations of the users change over time (Georges et al., 2016). The reasons for dropping out might be due to internal factors relating to a participant’s decision to stop the activity or external environmental factors that caused them to terminate their engagement (O’Brien & Toms, 2008). These factors influence participants during all phases of the innovation process, from contextualization to test and evaluation (Habibipour et al., 2016).

A number of studies have acknowledged the importance of sustaining user engagement during living lab activities (Hess & Ogonowski, 2010; Leonardi et al., 2014; Ley et al., 2015). However, to the best of our knowledge, there are no studies investigating the drop-out rate in living lab field tests. Despite this, within the process of system development at a general level, the drop-out rate has usually been reported to be more than 50% (De Moor et al., 2010; Hess et al., 2008; Sauermann & Franzoni, 2013), which might have negative consequences for both the project outcome and the project organizers. Given that participating users already have a profound understanding and knowledge about the activity or project (Hess & Ogonowski, 2010), they are able to provide more useful and reliable feedback compared to the users who join the project when it is already underway (Ley et al., 2015). Moreover, once a project is underway, a trustful relationship between the users and developers has (presumably) already been established and this trust has been shown to be positively associated with project results (Carr, 2006; Jain, 2010). Also, having users drop out of projects is costly in terms of both time and resources, as the developers need to train new users and provide them with adequate infrastructure, such as hardware, software, and communication technology (Ley et al., 2015). Finally, the issue of drop-out is important to the extent that Kobren and colleagues (2015) assert that, after dropping out, a participant provides no additional value for the project or activity.

Despite the above-mentioned consequences that drop-out has for the projects or activities, the literature lacks theories describing the phenomenon of user drop-out within the area of field tests in general and living lab field tests in particular. But, before such theories can be developed, we must define, categorize, and organize the factors that may influence drop-out behaviour. Such a taxonomy can form the basis of a theoretical framework in the area of this study. Accordingly, the aims of the current study are: i) to provide an empirically grounded definition of a “drop-out” in living lab field tests; ii) to develop an empirically derived, comprehensive taxonomy for the various factors that influence drop-out behaviour in a living lab setting; and iii) to understand the extent to which each of the identified items influences the drop-out behaviour of participants in living lab field tests. To achieve these aims, we first extracted findings from our previous work on the topic to identify the factors that influence participant drop-out behaviour, and then the results were validated across 14 semi-structured interviews with experts in living lab field tests.

The article is organized as follows: After presenting the theoretical framework in the next section, we outline the methodology and research process we used to derive the taxonomy, followed by a summary of our previous work on this topic, from which we extracted an initial list of factors. After that, we present our definition of “drop-out” in living lab field tests. Then, we discuss the most influential factors on drop-out behaviour and present the taxonomy we developed to categorize drop-outs in living lab field tests. Finally, we discuss the implications and limitations of the study, and we offer some concluding remarks.

A Theoretical Framework

In this section, we develop a framework to identify and categorize various factors that influence participant drop-out behaviour in living lab field tests. There are different definitions and interpretations of the concept “living lab”; what is common in all viewpoints is that living labs integrate technical, social, and organizational structures that are related to various stakeholders and their perspectives (McNeese et al., 2000). Accordingly, living labs can be considered as socio-technical systems, as they focus on individuals, tasks, and structures, as well as technologies and the interactions between different stakeholders (Schaffers et al., 2009). Generally, socio-technical systems “comprise the interaction and dependencies between aspects such as human actors, organizational units, communication processes, documented information, work procedures and processes, technical units, human-computer interactions, and competencies” (Herrmann, 2009). Accordingly, socio-technical systems might consist of individual users, and technical, social, cultural, and organizational components (Pilemalm et al., 2007). When it comes to involving individual users in socio-technical systems, all technical features of the system, the social interactions supported by the system, and other socio-technical aspects influence how the users perceive and interpret their experiences and subsequently how they behave (Di Gangi & Wasko, 2009). In a study of participatory design for the development of socio-technical systems, Pilemalm and co-authors (2007) highlighted the importance of active user participation throughout the whole process of socio-technical system design and development, and they argued that this topic deserves more research.

The integration of social structures and perspectives with technical functions is the central problem in the design of socio-technical systems (Herrmann, 2009). In order to tackle this problem and integrate the impacts of socio-technical theory within the area of IT-system development, we found the technology–organization–environment (TOE) framework (Depietro et al., 1990) suitable because it has been developed to link information system innovation with contextual factors, and it enables us to address the development process of IT innovations in open systems (Chau & Tam, 1997). In addition, the TOE model has broad applicability and possesses explanatory power across a number of technological, industrial, and national/cultural contexts (Baker, 2012). Furthermore, it can be extended to settings for examining and explaining different innovation modes (Song et al., 2009).

Another benefit of using the TOE framework is that it is highly flexible and generic and, instead of explicitly specifying different variables in each category, it allows us to include different sources of influence on system design and development process (Zhu & Kraemer, 2005). Accordingly, the TOE framework provides a more holistic view of all three main aspects of a socio-technical system (i.e., the social, technical, and socio-technical aspects) and helps us to better meet the needs and expectations of the various involved stakeholders throughout the design and development process (Herrmann, 2009; Nkhoma et al., 2013).

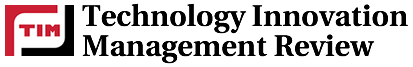

In this model, technology is associated with the technical aspects of a socio-technical system, which might be related to the platform, innovation, infrastructure, etc. Environment reflects more on the social aspects of a socio-technical system such as the real-life everyday use context, the personal context, and so on. And, finally, organization is associated with the socio-technical aspects of a socio-technical system in ways such as organizing the research, communication between different stakeholders, designing the processes, etc. Figure 1 shows the theoretical framework for this study.

Figure 1. Applying the technology–organization–environment (TOE) framework to socio-technical systems

Methodology

In order to better understand drop-out behaviour of field test participants, a detailed and systematic study needs to be conducted in the relevant natural setting using a qualitative approach (Kaplan & Maxwell, 2005). In contrast with a typology, in which the categories are derived from a pre-established theoretical framework, a taxonomy emerges empirically through an inductive approach and is developed based on observed variables (Sokal & Sneath, 1963).

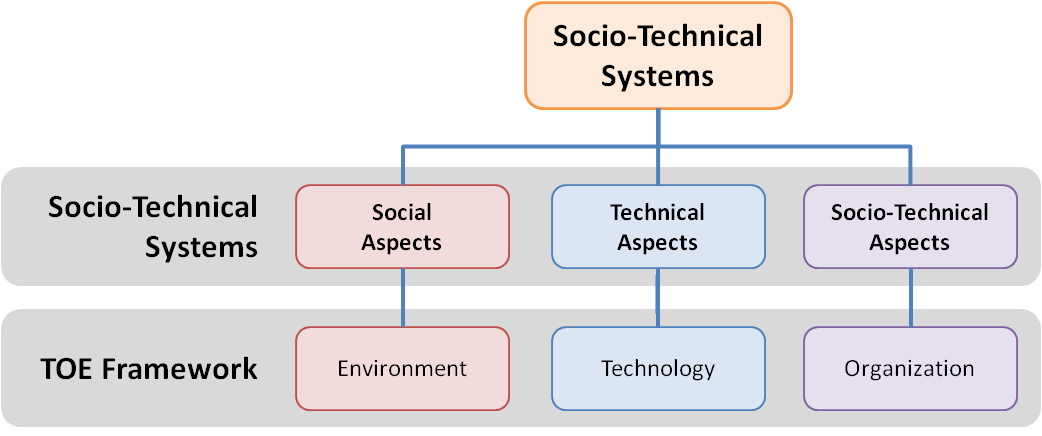

In order to develop a taxonomy for factors influencing drop-out behaviour, we used various qualitative data collection methods to gather information about the reasons participants drop out of living lab field tests. In this study, we collected qualitative data in two major steps. First, we extracted from our previous studies on the topic possible reasons for participant drop-out in living lab field tests. Second, these findings were validated by interviewing experts in living lab field tests to strengthen the validity and trustworthiness of the collected data used to build a taxonomy for drop-out. Figure 2 summarizes the research process for this study, which is explained in detail below.

Figure 2. Research process for this study

In the first major step, we explored documented reasons for participant drop-out in field tests. As recommended by Strauss and Corbin (1998), when a research field still lacks explicit boundaries between the context and phenomenon, reviewing previous literature can be used as a point of departure for further research. Accordingly, this phase of data collection followed the results of our earlier literature review on the topic (Habibipour et al., 2016). Through this process, we extracted 29 items (or factors) that influence participant drop-out behaviour. In addition, we identified other possible factors that may influence participant drop-out based on our results from four different field tests: three with imec.livinglabs in Belgium (Georges et al., 2016) and one with Botnia Living Lab in Sweden (Habibipour & Bergvall-Kåreborn, 2016). In these field tests, the data were collected through an open-ended questionnaire as well as direct observation of drop-out behaviour. This resulted in a further 42 items. After eliminating redundant or similar items from the combined 71, we ended up with 53 items.

In order to promote stronger interaction between research and practice and to obtain more reliable knowledge, social scientists recommend that studies should include different perspectives (Kaplan & Maxwell, 2005). This approach is in line with Van de Ven’s (2007) recommendation to conduct social research as “engaged scholarship”, which he defines as:

“...a participative form of research for obtaining the different perspectives of key stakeholders (researchers, users, clients, sponsors, and practitioners) in studying complex problems. By involving others and leveraging their different kinds of knowledge, engaged scholarship can produce knowledge that is more penetrating and insightful than when scholars or practitioners work on the problem alone.”

Thus, in the second round of data collection, we conducted 14 semi-structured, open-ended interviews with experts in living lab field tests. Eight of the 14 interviewees were user researchers or panel managers from imec.livinglabs in Belgium and six were living lab researchers from Botnia Living Lab in Sweden. These experts were selected not only because they were familiar with living lab studies in general, but also because they had extensive work experience in conducting living lab field tests. Although interviewing dropped-out participants could also provide us with valuable information, their point of view is usually limited to one or two field tests, in contrast to the experts, who have been involved in various field tests in different contexts. Moreover, in many cases, it was not feasible to ask them to be interviewed given that they had already dropped out of a previous research project, which is their right as voluntary participants.

The aim of these interviews was to validate the findings of the first data collection wave with the researchers, which enabled us to find an initial structure for the proposed taxonomy. The results from this step were analyzed separately in two groups, one for each living lab (i.e., Botnia and imec.livinglabs). Accordingly, in this study, we used data, method, and investigator triangulation to increase the reliability as well as the validity of the results and to lend greater support to the conclusions (Benbasat et al., 1987; Flick, 2009).

The topic guide of the interview consisted of two major parts. First, the interviewees were asked open questions about living lab field tests, drop-out, and components of drop-out (e.g., definition, types of drop-out, main drop-out reasons, and when they consider a participant as dropped out). In the second part, we used the results of our previous studies as input for developing the interview protocol and, thus, the interviewees were given 53 cards, each one showing an identified factor. We asked the interviewees to put each of these cards into one of three main categories – not influential at all, somewhat influential, or extremely influential – according to their perceived extent of influence on participant drop-out in the living lab field tests they were involved in. They were also provided with some empty cards in case they wanted to add other items that were not presented in the pre-prepared 53 cards. This rating procedure helped us to understand the degree of importance of each item. Then, they were asked to group extremely influential items into coherent groups with a thematic relation. This helped us to identify the main categories of drop-out and enabled us to develop our taxonomy.
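The card-sorting step described above can be pictured as a simple tally. The following is a minimal sketch, not the procedure used in the study: the function name `tally_ratings`, the item names, and the ratings are hypothetical, and it assumes each interviewee's sort is recorded as a mapping from item to one of the three ratings.

```python
from collections import Counter

# The three rating piles named in the interview protocol.
RATINGS = (
    "not influential at all",
    "somewhat influential",
    "extremely influential",
)


def tally_ratings(sorts):
    """Count, per item, how many interviewees chose each rating.

    `sorts` maps an interviewee ID to a dict of {item: rating}.
    """
    tally = {}
    for ratings in sorts.values():
        for item, rating in ratings.items():
            if rating not in RATINGS:
                raise ValueError(f"unknown rating: {rating!r}")
            tally.setdefault(item, Counter())[rating] += 1
    return tally


# Hypothetical card sorts from two interviewees (not data from the study).
example_sorts = {
    "B1": {
        "usability problems": "extremely influential",
        "long test duration": "somewhat influential",
    },
    "S1": {
        "usability problems": "extremely influential",
        "long test duration": "not influential at all",
    },
}

tally = tally_ratings(example_sorts)
print(tally["usability problems"]["extremely influential"])  # 2
```

Items with a high count in the "extremely influential" pile would be the ones carried forward to the grouping exercise.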

To analyze the data, we used qualitative coding because it is the most flexible method of qualitative data analysis (Flick, 2009) and allows researchers to build a theory through an iterative process of data collection and analysis (Kaplan & Maxwell, 2005). In this regard, developing a taxonomy is the first step in empirically building a theoretical foundation based on the observed factors (Stewart, 2008). This approach facilitates insight, comparison, and the development of the theory (Kaplan & Maxwell, 2005) and enables us to identify key concepts in order to develop an initial structure for the taxonomy for drop-out in living lab field tests. The coding was done in three major steps. First, all categories suggested by the interviewees were assigned a unique code (e.g., “1” for interaction, “2” for timing issues, etc.). Second, redundant or similar categories were combined and assigned the same code (e.g., “timing” and “scheduling”, “interaction” and “communication”, etc.). Finally, considering our theoretical framework, all remaining categories were grouped into three main meaningful themes that represented the social, technical, and socio-technical aspects.
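The three coding steps can be illustrated with a short sketch. It is an illustration under stated assumptions rather than the authors' actual procedure: the function names are hypothetical, the synonym pairs are the two examples given in the text, and the theme mapping covers only the labels used here.

```python
# Step 2 input: labels treated as similar share one category.
# These two pairs are the examples mentioned in the text.
SYNONYMS = {"scheduling": "timing", "communication": "interaction"}

# Step 3 input: theme for each merged category (only the labels used below).
THEMES = {
    "interaction": "process-related",
    "timing": "process-related",
    "technological problems": "innovation-related",
}


def code_categories(suggested):
    """Steps 1 and 2: give each distinct (merged) category a numeric code."""
    codes = {}
    for label in suggested:
        merged = SYNONYMS.get(label.lower(), label.lower())
        # A new category gets the next free code; a repeat keeps its code.
        codes.setdefault(merged, len(codes) + 1)
    return codes


def group_by_theme(codes):
    """Step 3: group the coded categories under their themes."""
    themes = {}
    for category in codes:
        themes.setdefault(THEMES[category], []).append(category)
    return themes


# Hypothetical list of category labels suggested across interviews.
suggested = [
    "Interaction",
    "Timing",
    "Scheduling",
    "Communication",
    "Technological problems",
]

codes = code_categories(suggested)
themes = group_by_theme(codes)
print(codes)
print(themes)
```

Here "Scheduling" and "Communication" collapse into the codes already assigned to "timing" and "interaction", so five suggested labels yield three coded categories.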

Building on Previous Studies

Our previous studies show that keeping users motivated and engaged is not an easy task as they may tend to drop out before completing the project or activity (Georges et al., 2016). However, to the best of our knowledge, there are few studies addressing reasons for participant drop-out in living lab field tests.

In Habibipour, Bergvall-Kåreborn, and Ståhlbröst (2016), we carried out a comprehensive literature review to identify documented reasons for drop-out in information systems development processes. We identified some influential factors on drop-out behaviour and classified them into technical aspects, social aspects, and socio-technical aspects. When it comes to technical aspects, the main reasons that lead to drop-out are related to the performance of the prototype or interactions with it, such as task complexity and usability problems (e.g., instability or unreliability of the prototype). Limitation of users’ resources, inadequate infrastructure, and insufficient technical support are other technical aspects. Regarding the social aspects, issues related to the relationship (either between users and developers or between participants themselves), lack of mutual trust, and inappropriate incentive mechanisms are the main reasons. In considering the socio-technical aspects, wrong user selection and privacy and security concerns were further highlighted in the studies. However, in the above-mentioned study (Habibipour et al., 2016), we did not focus on a specific phase or type of activity, and extracted the drop-out reasons for all steps of the information systems development process, such as ideation, co-design or co-creation, and, finally, test and evaluation.

In Georges, Schuurman, and Vervoort (2016), we conducted a qualitative analysis within three living lab field tests to find factors that are related, either positively or negatively, to different types of drop-out during field tests. The field tests were carried out in living lab projects from iMinds living labs (now imec.livinglabs). The data in this study was collected via open questions in post-trial surveys of the field tests and an analysis of drop-out from project documents. The results of this study show that several factors related to the innovation, as well as related to the field trial setup, play a role in drop-out behaviour, including the lack of added value of the innovation and the extent to which the innovation satisfies the needs, the restrictions of test users’ time, and technical issues.

We have also attempted to present a user engagement process model that includes the variety of reasons for drop-out (Habibipour & Bergvall-Kåreborn, 2016). The presented model in this study is grounded on the results of a literature review as well as a field test with Botnia Living Lab. In this model, influential factors on drop-out behaviour are associated with:

- Task design, such as complexity and usability

- Scheduling, such as longevity

- User selection process, such as poor user selection with low technical skills

- User preparation, such as unclear or inaccessible guidelines

- Implementation and test process, such as inadequate infrastructure

- Interaction with the users, such as developers ignoring user feedback or lack of mutual trust

In total, we extracted 29 items from the first article (Habibipour et al., 2016), 27 items from the second article (Georges et al., 2016), and 15 items from the third article (Habibipour & Bergvall-Kåreborn, 2016). By removing redundant items, we ended up with 53 factors that influence drop-out behaviour. In this study, we build on these studies by addressing the need for a clear definition of “drop-out” as well as a taxonomy of possible reasons participants drop out.

Proposed Definition

Defining the key concepts is the first step in constructing a theoretical discourse. The definition of “drop-out” in a living lab field test was developed by analyzing the interviewees’ responses to two open-ended questions: “When do you consider a participant as dropped out?” and “What is a drop-out in living lab field tests, according to you?”. First, participants might take part only in the startup of the field test without ever starting to use the innovation. As one of the interviewees stated: “A drop-out is when they have started the test period and they are not fulfilling the assignments and complete the tasks. First of all, we need to think of the term ‘user’. If they drop out before they have actually used anything, can we call them a user drop-out or should we call them participants? If they are only participating in the startup but they have not started to use that innovation, we can’t really call them user. If they have downloaded or installed or used the innovation or technology, then they are users.” Drop-out behaviour can also occur when participants stop using the innovation because of motivational or technical reasons related to the innovation. For example, an interviewee mentioned: “…people have to install something and they don’t succeed because they don’t understand it or the innovation is not what they expected or wanted.” Another said: “During the field test, the longer the field test, the bigger the drop-out. I’ve seen it, why should I still use it?” Finally, drop-out behaviour can be related to the process in which the living lab field test is conducted. For instance, the participants might stop participating in the field test, after which point no further feedback is given. As an interviewee stated: “We, as researchers, must be particularly afraid of […] drop-out, when we cannot get feedback from test users”. Or as another interviewee stated: “People that do not fulfill the final task (mostly a questionnaire) are also considered as drop-outs for me.”

Our finding also supports the argument put forward by O’Brien and Toms (2008), who stated that user disengagement might be due to an internal decision of the participant to stop the activity or external environmental factors that caused them to terminate their engagement before completing the assigned tasks. Accordingly, the drop-out decision can be made consciously or subconsciously by the participants, but is characterized by the fact that they do not notify the field test organizers. For instance, an interviewee made a distinction between dropped-out users and a defector, which is someone who notifies the project that they will stop testing but will still give feedback: “If you stop testing and you keep on filling in the surveys (participating in research), you are not a dropped-out user. You need to make a distinction between those who stop testing the application and those who stop filling in the surveys...” What is common in all of the above-mentioned arguments is that the participants showed their initial interest to participate in the field test but stopped performing the tasks before the field test had ended. Thus, we propose the following definition of drop-out in living lab field tests:

“A drop-out during a living lab field test is when someone who has signed up to participate in the field test does not complete all the assigned tasks within the specified deadline.”

Within this definition, there are three important elements:

- The dropped-out participant signed up to participate. This element implies that the potential participants must be aware of what is expected of them.

- The dropped-out participant did not complete all the assigned tasks. Depending on the type of field test, this could be the act of using/testing the innovation, but could also refer to participating in research steps (e.g., questionnaires, interviews, diary studies). This distinction was noted by Eysenbach (2005) in his law of attrition (drop-out attrition and non-usage attrition).

- The dropped-out participant did not complete the assigned tasks within the specified deadline that was agreed upon.
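The three elements of the definition can be read as a simple predicate. The sketch below is one possible formalization, offered as an assumption rather than as part of the study: the `Task` record, the function `is_drop_out`, and the example dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Task:
    name: str
    deadline: date
    completed_on: Optional[date] = None  # None means never completed


def is_drop_out(signed_up: bool, tasks: List[Task]) -> bool:
    """Apply the three elements of the proposed definition."""
    # Element 1: only someone who signed up can be a drop-out.
    if not signed_up:
        return False
    # Elements 2 and 3: every assigned task must be completed,
    # and completed no later than its deadline.
    return any(
        t.completed_on is None or t.completed_on > t.deadline for t in tasks
    )


# Hypothetical participant: installed the app on time, skipped the final survey.
tasks = [
    Task("install app", deadline=date(2024, 1, 10), completed_on=date(2024, 1, 8)),
    Task("final questionnaire", deadline=date(2024, 2, 1)),
]

print(is_drop_out(True, tasks))  # True
```

Under this reading, a participant who stops using the innovation but still completes every assigned research task on time would not count as a drop-out, which matches the interviewee's distinction between stopping testing and stopping the surveys only when usage itself is not an assigned task.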

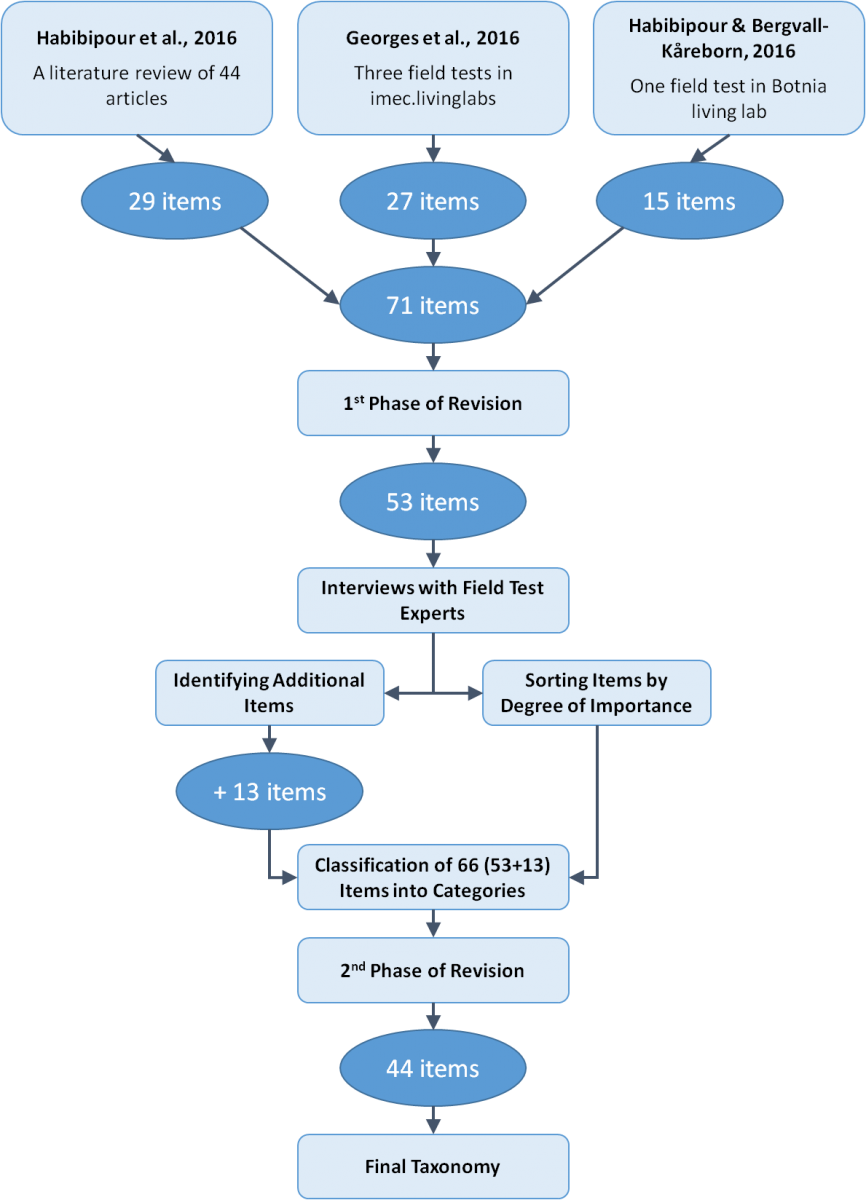

Proposed Taxonomy

Taxonomies are useful for research purposes: they can help leverage and articulate knowledge and are fundamental to organizing knowledge and information in order to refine information through standardized and consistent procedures (Stewart, 2008). As mentioned in the methodology section, the taxonomy we developed through this study is grounded in the results of a literature review article (Habibipour et al., 2016) as well as the results of four living lab field tests (Georges et al., 2016; Habibipour & Bergvall-Kåreborn, 2016). The findings of the previous steps were validated across 14 semi-structured interviews. This triangulation of the data strengthens the validity of the presented taxonomy and makes our results stronger and more reliable (Benbasat et al., 1987). The interviewees were asked to group the items that are extremely influential on participant drop-out into coherent groups. Our goal was to identify the categories most frequently suggested by the interviewees. Table 1 shows the categories of items that they initially suggested: B1 to B8 refer to the interviewees in imec.livinglabs in Belgium and S1 to S6 refer to the interviewees in Botnia Living Lab in Sweden. In some cases, an item can belong to different categories because the same item was interpreted differently by the interviewees. For example, two interviewees mentioned privacy and security concerns as “personal context” while six of them considered it under the category of “participants’ attitudes”. Thus, we decided to put the privacy and security concerns under the “participants’ attitudes” category.

An important outcome of this study was a refinement of the initial list of items that was extracted from our previous studies. During the interviews, we asked the interviewees to express their feelings about each item and add any comments or explanations. By doing so, we eliminated some items that were similar and combined the items that were very closely related. In this study, we were also interested in discovering other factors influencing drop-out behaviour that we were not aware of, and some of the interviewees added items to our original list. As a result, we ended up with a revised list of 44 items, which we used to develop the final taxonomy shown in Table 2.

According to the results of the 14 interviews and based on the number of overlaps in the categories, we determined that nine categories was the most meaningful way of organizing the factors influencing drop-out behaviour in living lab field tests. The identified categories were grouped under three main themes: innovation-related factors, process-related factors, and participant-related factors. In the sub-sections that follow, we discuss each of these themes in detail.

Table 1. Summary of the categories suggested by the 14 interviewees (B1–B8; S1–S6)

| Category | Suggested by | Total |
|---|---|---|
| Technological issues | 12 of 14 interviewees | 12 |
| Perceived usefulness | B1–B8, S1, S3, S4 | 11 |
| Participants’ resource limitation | B1, B4–B6, B8, S1–S5 | 10 |
| Personal reasons/problems | 9 of 14 interviewees | 9 |
| Communication/interaction | 9 of 14 interviewees | 9 |
| Planning/task design | 9 of 14 interviewees | 9 |
| Timing | 7 of 14 interviewees | 7 |
| Privacy and security | 6 of 14 interviewees | 6 |
| Personality/participants’ attitudes | 6 of 14 interviewees | 6 |
| Perceived ease of use | B1, B3, B4, B7, B8, S2 | 6 |
| Forgetfulness | 3 of 14 interviewees | 3 |

Table 2. The proposed taxonomy of factors influencing participant drop-out behaviour in living lab field tests

| Theme | Categories |
|---|---|
| Innovation-Related Factors | Technological Problems; Perceived Usefulness; Perceived Ease of Use |
| Process-Related Factors | Task Design; Interaction; Timing |
| Participant-Related Factors | Participants’ Attitudes; Personal Context; Participants’ Resources |
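For readers who want to work with the taxonomy programmatically, its theme and category levels can be written as a small nested mapping. This is only an illustrative sketch: the names `TAXONOMY` and `theme_of` are hypothetical, and the item level of the taxonomy is left out.

```python
# Themes and categories as named in Table 2 (item level omitted).
TAXONOMY = {
    "Innovation-related factors": [
        "Technological problems",
        "Perceived usefulness",
        "Perceived ease of use",
    ],
    "Process-related factors": [
        "Task design",
        "Interaction",
        "Timing",
    ],
    "Participant-related factors": [
        "Participants' attitudes",
        "Personal context",
        "Participants' resources",
    ],
}


def theme_of(category):
    """Return the theme that a category belongs to."""
    for theme, categories in TAXONOMY.items():
        if category in categories:
            return theme
    raise KeyError(category)


n_categories = sum(len(categories) for categories in TAXONOMY.values())
print(n_categories)        # 9
print(theme_of("Timing"))  # Process-related factors
```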

Innovation-related factors

The categories under this theme relate directly to the innovation itself and reflect the technical aspects of socio-technical systems. Technological problems, perceived ease of use, and perceived usefulness were the categories most frequently suggested by the interviewees. Two of these categories, perceived usefulness and perceived ease of use, are in line with the technology acceptance model (Davis, 1985; Venkatesh & Davis, 2000). Whereas in the technology acceptance model perceived usefulness and perceived ease of use are the main drivers of adoption, in our model they are related to drop-out behaviour.

- Technological problems: The interviews revealed that technological problems are among the most important innovation-related factors in drop-out behaviour. Items in this category include, for example, trouble installing the innovation, a lack of flexibility, infrastructure compatibility issues, and issues with the stability and maturity of the (prototype) innovation.

- Perceived usefulness: This category highlights the importance of user needs. When the innovation does not meet the user’s needs, it can be difficult to maintain the same level of engagement throughout the lifetime of a field test. In addition, a participant who contributes voluntarily to a field test must be able to see the potential benefits of testing the innovation in their everyday life.

- Perceived ease of use: The complexity of the innovation might negatively influence participant motivation. When the innovation is too complex to use or is not easy to understand, participants may become confused or discouraged. Moreover, when the innovation is not sufficiently mature, it is difficult to keep the participants enthusiastically engaged in the field test.

Participant-related factors

Some of the suggested categories were directly related to the individuals and their everyday life contexts. This theme mainly reflects the social and environmental aspects of socio-technical systems. The participants’ attitudes or personalities, their personal contexts, and their resources can be classified under the participant-related theme.

- Participants’ attitudes: A number of items can be subsumed under the category of participants’ attitudes. For example, this category includes situations in which participants forget to participate, the innovation does not meet their expectations, they do not want to install something new on their device, they do not like the concept or idea, or they have concerns about their privacy or the security of their information.

- Everyday context: In a living lab approach, users usually test innovations within their own real-life setting. Therefore, challenges they face in their personal lives, unrelated to the testing activity, can negatively influence their motivation and may cause them to drop out of a field test.

- Participants’ resources: Limitations in participants’ resources can also influence the likelihood that they will drop out. They might not have enough time to be involved in the field test, or the project may place too many demands on their resources, such as draining their own mobile batteries or consuming part of their Internet data quota.

Process-related factors

These factors relate to the process of organizing a field test in a living lab setting where the socio-technical aspects are in focus. The three categories under this theme were associated with task design, interaction with the participants, and the timing of the field test.

- Task design: The results showed that various factors are related to the design of the field test. For instance, when the tasks during the field test are not fun to accomplish, participants tend to drop out before completing the test. The interviewees also considered a long gap between the field test’s steps and a lengthy field test to be influential factors associated with task design.

- Interaction: Interaction and communication with the participants were considered one of the most important categories of items that influence a participant’s decision to drop out. Unclear guidelines on how to do the tasks, a lack of appropriate technical support, and insufficient triggers to involve participants are some examples of the items in this category.

- Timing: Inappropriate timing of the field test (e.g., during the summer holiday) and overly strict or inflexible deadlines are the most influential factors on drop-out behaviour in this category. When participants are not able to participate in a field test at their own pace, they may prefer to stop testing the innovation.

The taxonomy developed from the resulting themes and categories is shown in Figure 3. The numbers in parentheses indicate the number of items under each category. The items under each of the themes and subcategories are shown in Table 2.

Figure 3. Overview of the proposed taxonomy of factors that influence participant drop-out in living lab field tests

The Most Influential Factors on Drop-Out Behaviour

In this study, we were also interested in knowing the extent to which each of the identified factors influences the drop-out behaviour of participants in living lab field tests. As mentioned in the methodology section, we asked the interviewees to group the items into three categories: not influential at all on drop-out behaviour (=1 point), somewhat influential on drop-out behaviour (=2 points), and extremely influential on drop-out behaviour (=3 points). They chose and categorized the items based on their previous experiences with various living lab field tests and, therefore, these results reflect their own perspective. Next, we summed the scores for each item and sorted the items from highest to lowest total, as shown in Table 3. Using this method, the minimum possible total for a given item is 14 (14 × 1), and the maximum possible total is 42 (14 × 3). Our results show a range from 18 to 40, with the top-10 items having totals of 35 or higher.
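The scoring procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the tooling used in the study: the function name `total_scores` is hypothetical, and the example ratings are invented for demonstration and are not the interview data.

```python
from typing import Dict, List, Tuple

# Points per rating, as described in the text:
# 1 = not influential at all, 2 = somewhat influential, 3 = extremely influential.
VALID_POINTS = {1, 2, 3}
NUM_INTERVIEWEES = 14


def total_scores(ratings: Dict[str, List[int]]) -> List[Tuple[str, int]]:
    """Sum each item's ratings and sort items from highest to lowest total."""
    totals = []
    for item, points in ratings.items():
        if len(points) != NUM_INTERVIEWEES:
            raise ValueError(f"{item!r}: expected {NUM_INTERVIEWEES} ratings")
        if not set(points) <= VALID_POINTS:
            raise ValueError(f"{item!r}: ratings must be 1, 2, or 3")
        totals.append((item, sum(points)))
    # Python's sort is stable, so items with equal totals keep their input order.
    return sorted(totals, key=lambda pair: pair[1], reverse=True)


# Hypothetical ratings (NOT the study's data), one value per interviewee.
example = {
    "Item A": [3] * 12 + [2] * 2,  # 36 + 4 = 40
    "Item B": [1] * 10 + [2] * 4,  # 10 + 8 = 18
    "Item C": [2] * 14,            # 28
}
ranked = total_scores(example)
print(ranked)
```

With 14 interviewees, an item rated 1 by everyone totals 14 and an item rated 3 by everyone totals 42, which matches the bounds given in the text.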

Table 3. The degree of influence of each factor on participant drop-out behaviour in living lab field tests

| Rank | Item | Category | Score |
|---|---|---|---|
| 1 | I had trouble installing the innovation. | Innovation | 40 |
| 2 | There were problems with compatibility of the infrastructure. | Innovation | 38 |
| 3 | The timing of the project was inappropriate. | Process | 38 |
| 4 | I didn’t have enough time to be involved in this project. | Participants | 37 |
| 5 | The innovation was technologically too complex. | Innovation | 36 |
| 6 | There were no benefits for me in the field test. | Participants | 36 |
| 7 | The innovation was not stable. | Innovation | 35 |
| 8 | The innovation did not meet my needs. | Innovation | 35 |
| 9 | The innovation was not easy to understand. | Innovation | 35 |
| 10 | The innovation did not meet my expectations. | Innovation | 35 |
| 11 | There was no clear guideline on how to do the tasks. | Process | 33 |
| 12 | It was a lengthy project. | Process | 33 |
| 13 | I was not satisfied with the technical support during my involvement period. | Process | 33 |
| 14 | I forgot to test. | Participants | 33 |
| 15 | My personal context made me unable to keep on participating in the test. | Participants | 33 |
| 16 | It was unclear what was expected of me during the field test. | Process | 32 |
| 17 | I did not want to install something new on my device. | Process | 32 |
| 18 | The innovation was not reliable. | Innovation | 32 |
| 19 | The feeling of novelty associated with the innovation quickly disappeared. | Participants | 32 |
| 20 | The innovation did not stimulate my curiosity. | Innovation | 32 |
| 21 | The tasks during the field test were not fun to accomplish. | Process | 31 |
| 22 | I was not able to keep track of the project status over time. | Process | 30 |
| 23 | There were not enough instant support in the field test process. | Process | 30 |
| 24 | I had concerns about my privacy and the security of my information. | Participants | 30 |
| 25 | I did not have the feeling that my feedback was important. | Process | 30 |
| 26 | The guidelines and instructions were not easy to find or access. | Process | 30 |
| 27 | I didn’t like the concept/idea. | Participants | 30 |
| 28 | I have no faith in the future of this innovation (I wouldn’t use it and don’t think others would either). | Innovation | 30 |
| 29 | I was not satisfied with the way(s) in which I received feedback from the project. | Process | 30 |
| 30 | The tasks were not easy to understand. | Process | 29 |
| 31 | I was not able to participate in this project at my own pace (e.g., strict deadlines, inflexible). | Process | 29 |
| 32 | There was no mutual trust with the organizers of the field test. | Process | 29 |
| 33 | The innovation had too few functions. | Innovation | 29 |
| 34 | I had not been informed about the project’s details before the start of the field test. | Process | 28 |
| 35 | I couldn’t test where and when I wanted. | Process | 28 |
| 36 | I had to consume my own Internet data quota. | Participants | 27 |
| 37 | The innovation did not meet my technical expectations. | Innovation | 26 |
| 38 | I had to consume my own resources, such as battery power. | Participants | 25 |
| 39 | The external context made me unable to keep on participating in the test (e.g., not enough content, not enough users). | Innovation | 25 |
| 40 | There is no incentive/prize to participate (or it was too small). | Participants | 25 |
| 41 | I did not know what the organizers were planning to do with my feedback. | Process | 25 |
| 42 | The point of contact was unclear. | Process | 24 |
| 43 | I was not satisfied with the way(s) in which I sent my feedback to the project. | Process | 23 |
| 44 | I had to use my own device. | Participants | 18 |

Of the top-10 items in Table 3, seven are related to the innovation itself. Problems related to installing the innovation; compatibility issues; the complexity, stability, and functionality of the innovation; usability; and ease of use are examples of items identified by the interviewees as the most influential innovation-related factors on participant drop-out behaviour. The implication of these findings is that, first and foremost, building sustainable user engagement in a living lab field test depends on careful consideration of issues that might emerge due to technological problems, perceived usefulness, and perceived ease of use. When the innovation does not work as promised, is not compatible with the participants’ devices, is technologically complex, or does not meet participants’ needs and expectations, it is very difficult to keep the users enthusiastically engaged in the living lab field test. Accordingly, participants may drop out at a very early stage of the field test without even having the opportunity to fully test the innovation.
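The count of innovation-related items among the top 10 can be checked directly against the Category and Score columns of Table 3. The short sketch below does this tally; the variable names are illustrative only.

```python
from collections import Counter

# (rank, category, score) for the ten highest-scoring items, copied from Table 3.
top_10 = [
    (1, "Innovation", 40),
    (2, "Innovation", 38),
    (3, "Process", 38),
    (4, "Participants", 37),
    (5, "Innovation", 36),
    (6, "Participants", 36),
    (7, "Innovation", 35),
    (8, "Innovation", 35),
    (9, "Innovation", 35),
    (10, "Innovation", 35),
]

# Count how many of the top-10 items fall under each theme.
by_theme = Counter(category for _, category, _ in top_10)
print(by_theme)
```

The tally gives seven innovation-related items, two participant-related items, and one process-related item, all with totals of 35 or higher.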

Conclusion

In this study, our aim was to provide a definition for “drop-out” in living lab field tests; to develop an empirically derived, comprehensive taxonomy of the factors that influence drop-out behaviour in a living lab field test; and to understand the extent to which each of the identified items influences participant drop-out behaviour. To develop a theoretical discourse about drop-out in field tests, there is a need to define, categorize, and organize the factors that may influence drop-out behaviour. Accordingly, we first identified factors influencing drop-out in field tests from our previous research on the topic and then interviewed 14 experts who are experienced in the area of field testing in a living lab setting.

According to our definition, a dropped-out participant in a living lab field test is someone who has signed up to participate in the field test but does not complete all the assigned tasks within the specified deadline. Our taxonomy revealed that the most influential reasons for dropping out were mainly related to the innovation, with additional factors related to the process of the living lab field test and to the participants themselves. In our suggested framework, each of the three main themes reflects a specific element of the TOE framework. Technical aspects (i.e., technological problems, perceived ease of use, and perceived usefulness) are the items associated with technology, and the innovation plays the central role in this theme. With regard to social aspects, environmental context such as participants’ everyday context and their resources is more influential on drop-out behaviour. Accordingly, social aspects relate more to the participants and their personal context. With regard to socio-technical aspects, the way the research is organized, communication and interaction between different stakeholders, task design, and timing also influence drop-out behaviour. This group of factors is associated with organizing the processes in terms of the TOE framework.

Our results also illustrate that the innovation-related items have a greater influence on drop-out behaviour. We do not wish to imply that the process-related and participant-related items are unimportant. Rather, we argue that when the innovation is not stable or sufficiently mature, is not compatible with the participants’ devices, or is technologically complex, participants are unable to continue participating in the living lab field test even if they do not want to drop out. Reflecting on the argument made by O’Brien and Toms (2008) that drop-out might be due either to an internal decision of the participant or to external factors, our findings showed that external factors (technological, environmental, etc.) exert greater influence on participant drop-out behaviour. Our suggestion is that the innovation should be as stable, easy to understand, and easy to use as possible and, if it is not possible to sufficiently simplify the field test, it should be divided into sub-tests. Moreover, the organizers of a living lab field test must keep the participants well informed about the whole process of the field test by providing them with clear, accessible, and comprehensible guidelines before and during the field test.

The presented taxonomy can be put to work in several ways. For instance, we believe that there is a need for practical guidelines that describe what the organizers of a living lab field test should do and how they should act in order to keep participants motivated and reduce the likelihood of drop-out throughout the innovation process. This taxonomy can be used as a framework to develop such practical guidelines for the field test organizers. As another example, this taxonomy might be used as the basis to develop a standard post-test survey to identify the reasons for drop-out in various field tests in different living labs.

However, our study has limitations. One limitation is that the drop-out reasons were extracted from field tests in two living labs (namely, Botnia Living Lab and imec.livinglabs). Therefore, we might not be well informed about the way that other living labs set up, organize, manage, and conduct their field tests, and consequently, the drop-out reasons could be different in those field tests for reasons such as cultural factors. Furthermore, drop-out behaviour might be associated with other influential factors such as degree of openness, number of participants, level of engagement, motivation type, activity type, and longevity of the field test. As an example, fixed and flexible deadlines for fulfilling the assigned tasks might result in different drop-out rates in a living lab field test (Habibipour et al., 2017). Therefore, these findings are tentative and might not generalize to other situations.

We also acknowledge the limitation of our study regarding the degree of influence of each factor on drop-out behaviour. On the one hand, although the initial list of these factors was extracted from the viewpoint of dropped-out participants in our previous studies, the degree of influence of each factor was evaluated only by experts in the area of living lab field tests, based on their real experiences and views. On the other hand, the total scores for the influential factors were quite close to each other and were even identical for some items. Therefore, due to the small sample size of respondents, the results might change slightly if one more or one fewer respondent were included. In future studies, one way to overcome this limitation would be to use 5-point scoring in order to gain greater resolution of differences and to show averages instead of total scores. Finally, future iterations of this work should triangulate our data by including the perspective of dropped-out participants in a more longitudinal study that uses different data collection methods and techniques (e.g., interviewing the dropped-out users and even those who have completed the test). The limited number of interviews (14 interviewees) can also be considered another limitation of this study, and further interviews would have made the information even richer.

This study also opens up avenues for future research. O’Brien and Toms (2008) introduced re-engagement as one of the core concepts of their user engagement process model. An interesting topic for further research would be to clarify how and why user motivation for engaging and for staying engaged in a living lab field test differ. Moreover, it is important to study how the organizers of a field test can re-motivate dropped-out participants in order to re-engage them in that field test and to examine the benefits of doing so. Another opportunity for future research is to understand patterns of reasons that lead to drop-out behaviour, and thus different types of drop-outs. This would, however, require more respondents and a more quantitative approach, given that such a large number of items scored by a small number of respondents might not provide robust results. Our hope is that the presented definition and the taxonomy can be used as a starting point for a theoretical framework in the area of this study.
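The sensitivity to sample size follows from simple arithmetic: on the three-point scale, one additional respondent adds between 1 and 3 points to every item, so the gap between two items' totals can shift by at most 2 (the same bound applies when one respondent is removed). The sketch below illustrates this bound together with the averaging suggested for future studies. The function names are hypothetical, and the totals used are taken from Table 3.

```python
NUM_RESPONDENTS = 14
LOW, HIGH = 1, 3  # points on the three-point scale used in this study


def mean_score(total: int, respondents: int = NUM_RESPONDENTS) -> float:
    """Average score per respondent, comparable across panels of different size."""
    return total / respondents


def order_could_change(total_a: int, total_b: int) -> bool:
    """True if one extra respondent could make the two items tie or swap places."""
    # One extra rating shifts the gap between two totals by at most HIGH - LOW.
    return abs(total_a - total_b) <= HIGH - LOW


# Totals from Table 3: rank 1 (40), rank 5 (36), rank 7 (35).
print(round(mean_score(40), 2))    # 2.86
print(order_could_change(36, 35))  # True
print(order_could_change(40, 35))  # False
```

Because many adjacent totals in Table 3 differ by 2 points or fewer, their relative order should be read with this margin in mind.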

Acknowledgements

A portion of this work was presented at the OpenLivingLabDays 2017 conference in Krakow, Poland, where it was awarded the Veli-Pekka Niitamo Prize for the best academic paper. The authors are grateful to the Editor-in-Chief, Chris McPhee, for his valuable comments, suggestions, and guidelines to improve this article. We would also like to thank all user researchers and panel managers of Botnia Living Lab and imec.livinglabs for their contributions to this research. This work was funded by the European Commission in the context of the Horizon 2020 project Privacy Flag (Grant Agreement No. 653426), the Horizon 2020 project U4IoT (Grant Agreement No. 732078), and the Horizon 2020 project UNaLab (Grant Agreement No. 730052), which are gratefully acknowledged.

References

Baker, J. 2012. The Technology–Organization–Environment Framework. Information Systems Theory: 231–245. New York: Springer.

https://doi.org/10.1007/978-1-4419-6108-2_12

Bano, M., & Zowghi, D. 2015. A Systematic Review on the Relationship between User Involvement and System Success. Information and Software Technology, 58: 148–169.

https://doi.org/10.1016/j.infsof.2014.06.011

Benbasat, I., Goldstein, D. K., & Mead, M. 1987. The Case Research Strategy in Studies of Information Systems. MIS Quarterly, 11(3): 369–386.

https://doi.org/10.2307/248684

Bergvall-Kåreborn, B., Eriksson, C. I., Ståhlbröst, A., & Svensson, J. 2009. A Milieu for Innovation: Defining Living Labs. Paper presented at the ISPIM Innovation Symposium, December 6–9, 2009, New York.

Bergvall-Kåreborn, B., Eriksson, C., & Ståhlbröst, A. 2015. Places and Spaces within Living Labs. Technology Innovation Management Review, 5(12): 37–47.

http://www.timreview.ca/article/951

Bergvall-Kåreborn, B., Holst, M., & Ståhlbröst, A. 2009. Concept Design with a Living Lab Approach. In Proceedings of the 42nd Hawaii International Conference on System Sciences: 1–10. Piscataway, NJ: IEEE.

https://doi.org/10.1109/HICSS.2009.123

Carr, C. L. 2006. Reciprocity: The Golden Rule of IS-User Service Relationship Quality and Cooperation. Communications of the ACM, 49(6): 77–83.

https://doi.org/10.1145/1132469.1132471

Chau, P. Y. K., & Tam, K. Y. 1997. Factors Affecting the Adoption of Open Systems: An Exploratory Study. MIS Quarterly, 21(1): 1–24.

https://doi.org/10.2307/249740

Claude, S., Ginestet, S., Bonhomme, M., Moulène, N., & Escadeillas, G. 2017. The Living Lab Methodology for Complex Environments: Insights from the Thermal Refurbishment of a Historical District in the City of Cahors, France. Energy Research & Social Science, 32(Supplement C): 121–130.

https://doi.org/10.1016/j.erss.2017.01.018

Davis, F. D. 1985. A Technology Acceptance Model for Empirically Testing New End-User Information Systems: Theory and Results. Doctoral dissertation. Cambridge, MA: Massachusetts Institute of Technology.

De Moor, K., De Pessemier, T., Mechant, P., Courtois, C., Juan, A., De Marez, L., & Martens, L. 2010. Evaluating a Recommendation Application for Online Video Content: An Interdisciplinary Study. In Proceedings of the 8th European Conference on Interactive TV and Video: 115–122. New York: ACM.

http://doi.org/10.1145/1809777.1809802

Depietro, R., Wiarda, E., & Fleischer, M. 1990. The Context for Change: Organization, Technology and Environment. The Processes of Technological Innovation, 199(0): 151–175.

Di Gangi, P. M., & Wasko, M. 2009. The Co-Creation of Value: Exploring User Engagement in User-Generated Content Websites. Proceedings of JAIS Theory Development Workshop. Sprouts: Working Papers on Information Systems, 9(50).

Eysenbach, G. 2005. The Law of Attrition. Journal of Medical Internet Research, 7(1): e11.

https://doi.org/10.2196/jmir.7.1.e11

Flick, U. 2009. An Introduction to Qualitative Research. Thousand Oaks, CA: Sage Publications.

Georges, A., Schuurman, D., & Vervoort, K. 2016. Factors Affecting the Attrition of Test Users During Living Lab Field Trials. Technology Innovation Management Review, 6(1): 35–44.

http://timreview.ca/article/959

Habibipour, A., & Bergvall-Kåreborn, B. 2016. Towards a User Engagement Process Model in Open Innovation. Paper presented at the ISPIM Innovation Summit, December 4–7, 2016, Kuala Lumpur.

Habibipour, A., Bergvall-Kåreborn, B., & Ståhlbröst, A. 2016. How to Sustain User Engagement over Time: A Research Agenda. Paper presented at the 22nd Americas Conference on Information Systems, August 11–14, 2016, San Diego.

Habibipour, A., Padyab, A., Bergvall-Kåreborn, B., & Ståhlbröst, A. 2017. Exploring Factors Influencing Participant Drop-Out Behavior in a Living Lab Environment. In Proceedings of the 2017 Scandinavian Conference on Information Systems: 28–40. New York: Springer.

https://doi.org/10.1007/978-3-319-64695-4_3

Herrmann, T. 2009. Systems Design with the Socio-Technical Walkthrough. In B. Whitworth & A. de Moor (Eds.), Handbook of Research on Socio-Technical Design and Social Networking Systems: 336–351. London: Information Science Reference (IGI Global).

http://doi.org/10.4018/978-1-60566-264-0

Hess, J., Offenberg, S., & Pipek, V. 2008. Community Driven Development As Participation? Involving User Communities in a Software Design Process. In Proceedings of the 10th Conference on Participatory Design: 31–40. Indianapolis, IN: Indiana University.

Hess, J., & Ogonowski, C. 2010. Steps Toward a Living Lab for Socialmedia Concept Evaluation and Continuous User-Involvement. In Proceedings of the 8th International Interactive Conference on Interactive TV and Video: 171–174. New York: ACM.

https://doi.org/10.1145/1809777.1809812

Jain, R. 2010. Investigation of Governance Mechanisms for Crowdsourcing Initiatives. Paper presented at the 16th Americas Conference on Information Systems, August 12–15, 2010, Lima, Peru.

Jespersen, K. R. 2010. User-Involvement and Open Innovation: The Case of Decision-Maker Openness. International Journal of Innovation Management, 14(03): 471–489.

https://doi.org/10.1142/S136391961000274X

Kaplan, B., & Maxwell, J. A. 2005. Qualitative Research Methods for Evaluating Computer Information Systems. In J. G. Anderson & C. E. Aydin (Eds.), Evaluating the Organizational Impact of Healthcare Information Systems: 30–55. New York: Springer.

Kobren, A., Tan, C. H., Ipeirotis, P., & Gabrilovich, E. 2015. Getting More for Less: Optimized Crowdsourcing with Dynamic Tasks and Goals. In Proceedings of the 24th International Conference on World Wide Web: 592–602. New York: ACM.

https://doi.org/10.1145/2736277.2741681

Leminen, S., Westerlund, M., & Nyström, A.-G. 2012. Living Labs as Open-Innovation Networks. Technology Innovation Management Review, 2(9): 6–11.

http://timreview.ca/article/602

Leonardi, C., Doppio, N., Lepri, B., Zancanaro, M., Caraviello, M., & Pianesi, F. 2014. Exploring Long-Term Participation within a Living Lab: Satisfaction, Motivations and Expectations. In Proceedings of the 8th Nordic Conference on Human-Computer Interaction: 927–930. New York: ACM.

http://doi.org/10.1145/2639189.2670242

Ley, B., Ogonowski, C., Mu, M., Hess, J., Race, N., Randall, D., Rouncefield, M., & Wulf, V. 2015. At Home with Users: A Comparative View of Living Labs. Interacting with Computers, 27(1): 21–35.

https://doi.org/10.1093/iwc/iwu025

Lin, W. T., & Shao, B. B. 2000. The Relationship between User Participation and System Success: A Simultaneous Contingency Approach. Information & Management, 37(6): 283–295.

https://doi.org/10.1016/S0378-7206(99)00055-5

McNeese, M. D., Perusich, K., & Rentsch, J. R. 2000. Advancing Socio-Technical Systems Design Via the Living Laboratory. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 44(12): 2-610-2-613.

https://doi.org/10.1177/154193120004401245

Nkhoma, M. Z., Dang, D. P., & De Souza-Daw, A. 2013. Contributing Factors of Cloud Computing Adoption: A Technology-Organisation-Environment Framework Approach. In Proceedings of the European Conference on Information Management & Evaluation: 180–189. Reading, UK: ACPI.

O’Brien, H. L., & Toms, E. G. 2008. What is User Engagement? A Conceptual Framework for Defining User Engagement with Technology. Journal of the American Society for Information Science and Technology, 59(6): 938–955.

https://doi.org/10.1002/asi.20801

Pedersen, J., Kocsis, D., Tripathi, A., Tarrell, A., Weerakoon, A., Tahmasbi, N., Xiong, J., Deng, W., Oh, O., & de Vreede, G.-J. 2013. Conceptual Foundations of Crowdsourcing: A Review of IS Research. In Proceedings of the 46th Hawaii International Conference on System Sciences (HICSS): 579–588. Piscataway, NJ: IEEE.

https://doi.org/10.1109/HICSS.2013.143

Pilemalm, S., Lindell, P.-O., Hallberg, N., & Eriksson, H. 2007. Integrating the Rational Unified Process and Participatory Design for Development of Socio-Technical Systems: A User Participative Approach. Design Studies, 28(3): 263–288.

https://doi.org/10.1016/j.destud.2007.02.009

Sauermann, H., & Franzoni, C. 2013. Participation Dynamics in Crowd-Based Knowledge Production: The Scope and Sustainability of Interest-Based Motivation. SSRN.

https://doi.org/10.2139/ssrn.2360957

Schaffers, H., Merz, C., & Guzman, J. G. 2009. Living Labs as Instruments for Business and Social Innovation in Rural Areas. In Proceedings of the 2009 IEEE International Technology Management Conference (ICE): 1–8. Piscataway, NJ: IEEE.

Schuurman, D. 2015. Bridging the Gap between Open and User Innovation? Exploring the Value of Living Labs as a Means to Structure User Contribution and Manage Distributed Innovation. Doctoral dissertation. Ghent, Belgium: Ghent University.

Sokal, R. R., & Sneath, P. H. 1963. Principles of Numerical Taxonomy. New York: W. H. Freeman.

Song, G., Zhang, N., & Meng, Q. 2009. Innovation 2.0 as a Paradigm Shift: Comparative Analysis of Three Innovation Modes. Paper presented at the 2009 International Conference on Management and Service Science, September 20–22, 2009, Wuhan, China.

https://doi.org/10.1109/ICMSS.2009.5303100

Ståhlbröst, A. 2008. Forming Future IT – The Living Lab Way of User Involvement. Doctoral dissertation. Luleå, Sweden: Luleå University of Technology.

Ståhlbröst, A., & Bergvall-Kåreborn, B. 2013. Voluntary Contributors in Open Innovation Processes. Managing Open Innovation Technologies: 133–149. Berlin, Heidelberg: Springer.

https://doi.org/10.1007/978-3-642-31650-0_9

Stewart, D. 2008. Building Enterprise Taxonomies. Charleston, SC: BookSurge Publishing.

Strauss, A., & Corbin, J. 1998. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Thousand Oaks, CA: Sage Publications.

Van de Ven, A. H. 2007. Engaged Scholarship: A Guide for Organizational and Social Research. Oxford: Oxford University Press.

Venkatesh, V., & Davis, F. D. 2000. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Management Science, 46(2): 186–204.

https://doi.org/10.1287/mnsc.46.2.186.11926

Zhu, K., & Kraemer, K. L. 2005. Post-Adoption Variations in Usage and Value of E-Business by Organizations: Cross-Country Evidence from the Retail Industry. Information Systems Research, 16(1): 61–84.

https://doi.org/10.1287/isre.1050.0045

Keywords: drop-out, field test, living lab, taxonomy, user engagement, user motivation